Certificate pinning is great in stone soup

In his "ground rules" article, Mordaxus gives us the phrase "stone soup security," where everyone brings a little bit and throws it into the pot. I always try to follow Warren Buffett's advice, to praise specifically and criticize in general.

So I'm not going to point to a specific talk I saw recently, in which someone talked about pen testing IoT devices, and stated, repeatedly, that the devices, and device manufacturers, should implement certificate pinning. They repeatedly discussed how easy it was to add a self-signed certificate and intercept communication, and suggested that the right way to mitigate this was certificate pinning.

They were wrong.

If I own the device and can write files to it, I can not only replace the certificate, but I can also patch a binary to replace a 'Jump if Equal' with a 'Jump if Not Equal,' and bypass your pinning. If you want to prevent certificate replacement by the device owner, you need a trusted platform which only loads signed binaries. (The interplay of mitigations and bypasses that gets you there is a fine exercise if you've never worked through it.)
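To make that bypass concrete, here's a minimal sketch in Python. It's an illustration under stated assumptions, not anyone's actual firmware: the certificate bytes are made-up placeholders, and `pin_check` and `patched_pin_check` are hypothetical names. The pin is modeled as a SHA-256 fingerprint of the certificate, which is one common way to pin, and the "patched" function stands in for what the check does after a single conditional jump has been flipped in the binary.

```python
import hashlib
import hmac

def fingerprint(cert_der: bytes) -> str:
    """SHA-256 fingerprint of a certificate's bytes, as a hex string."""
    return hashlib.sha256(cert_der).hexdigest()

# Placeholder bytes standing in for real DER-encoded certificates (assumption).
VENDOR_CERT = b"vendor-server-certificate"
ATTACKER_CERT = b"self-signed-interception-certificate"

# The pin that ships inside the device image.
PINNED_FINGERPRINT = fingerprint(VENDOR_CERT)

def pin_check(cert_der: bytes) -> bool:
    """Accept the connection only if the presented certificate matches the pin."""
    return hmac.compare_digest(fingerprint(cert_der), PINNED_FINGERPRINT)

def patched_pin_check(cert_der: bytes) -> bool:
    """The same check after the owner flips one branch in the binary.

    Inverting the comparison is the Python analogue of changing
    'Jump if Equal' to 'Jump if Not Equal'.
    """
    return not hmac.compare_digest(fingerprint(cert_der), PINNED_FINGERPRINT)

if __name__ == "__main__":
    print("unmodified, vendor cert accepted:  ", pin_check(VENDOR_CERT))
    print("unmodified, attacker cert accepted:", pin_check(ATTACKER_CERT))
    print("patched, attacker cert accepted:   ", patched_pin_check(ATTACKER_CERT))
```

The pin does its job until someone with write access changes the one comparison it depends on. Nothing in this code can defend itself against that; the defense has to come from the platform refusing to run the modified binary.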

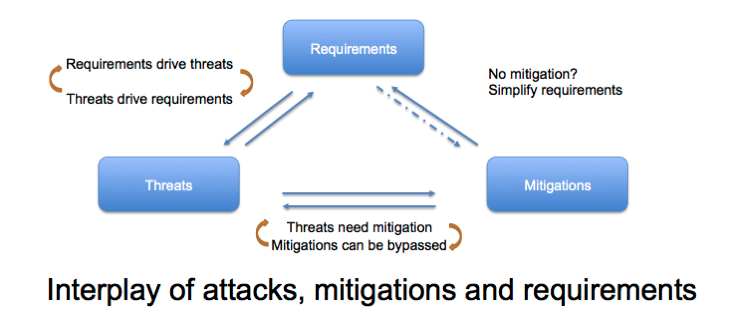

When I train people to threat model, I use this diagram to talk about the interaction between threats, mitigations, and requirements:

Is it a requirement that the device protect itself from the owner? If you're threat modeling well, you can answer this question. You work through these interplaying factors. You might start from a threat of certificate replacement, work through a set of difficult-to-mitigate threats, and change your requirements. You might start from a requirements question of "can we afford a trusted bootloader?" and discover that the cost is too high for the expected sales price, leading to a set of threats that you choose not to address. This goes to the core of "what's your threat model?" Does it include the device owner?

Is it a requirement that the device protect itself from the owner? This question frustrates techies: we believe that because we bought it, we should have the right to tinker with it. But we should also look at the difference between the iPhone and a PC. The iPhone is more secure. I can restore it to a reasonable state easily. That is a function of the device protecting itself from its owner. It frustrates me that there's a Control Center button to lock orientation, but not one to turn location on or off, yet I no longer jailbreak to address that. In contrast, a PC that's been infected with malware is hard to clean to a demonstrably good state.

Is it a requirement that the device protect itself from the owner? It's a yes or no question. Saying yes has an impact on the physical cost of goods. You need a more expensive, more sophisticated bootloader. You have to do a bunch of engineering work which is both straightforward and exacting. If you don't have a requirement to protect the device from its owner, then you don't need to pin the certificate. You can take the money you'd spend on protecting it from its owner, and spend that money on other features.

Is it a requirement that the device protect itself from the owner? Engineering teams deserve a crisp answer to this question. Without a crisp answer, security risks running them around in circles. (That crisp answer might be, "we're building towards it in version 3.")

Is it a requirement that the device protect itself from the owner? Sometimes when I deliver training, I'm asked if we can fudge, or otherwise avoid answering. My answer is that if security folks want to own security decisions, they must own the hard ones. Kicking them back, not making tradeoffs, not balancing with other engineering needs, all of these reduce leadership, influence, and eventually, responsibility.

Is it a requirement that the device protect itself from the owner?

Well, is it?