Threat Modeling Thursday: Machine Learning

For my first blog post of 2020, I want to look at threat modeling machine learning systems. [Update Jan 16: Victor of the Berryville Machine Learning Security blog has some interesting analysis here. That brings up a point I forgot to mention here: it would be great to name and version these models so we can discuss them more precisely.]

Microsoft recently released a set of documents including "Threat Modeling AI/ML Systems and Dependencies" and "Failure Modes in Machine Learning" (the latter also available in a more printer-friendly version at arXiv). These build on last December's "Securing the Future of Artificial Intelligence and Machine Learning at Microsoft."

First and foremost, I'm glad to see Microsoft continuing its long investment in threat modeling. We cannot secure AI systems by static analysis and fuzz testing alone. Second, this is really hard. I've been speaking with the folks at OpenAI (e.g., "Release Strategies and the Social Impacts of Language Models,") and so I've had firsthand insight into just how hard a problem it is to craft models of 'what can go wrong' with AI or ML models. As such, my criticisms are intended as constructive and said with sympathy for the challenges involved.

In "Securing the Future," the authors enumerate 5 engineering challenges:

- Incorporating resilience and discretion, especially as we handle voice, gesture and video inputs.

- Recognizing bias.

- Discerning malicious input from unexpected "black swans".

- Built-in forensic capability.

- Recognizing and safeguarding sensitive data, even when people don't categorize it as such.

I would have liked to see the first two flow more into this new work.

In "Failure Modes," the authors combine previous work to create a new set of 11 attacks and 6 failures. The attacks range from "perturbation attacks" to "Attacking the ML supply chain" or "exploiting software dependencies." I like the attempt to unify the attacks and failure modes into a single framework. I do think that the combination of attacks calls out for additional ways to slice it. For example, the people who are concerned about model inversion are likely not the same as those who are going to worry about software dependencies, unless there's a separated security team. (See more below on the threat modeling document, but in general, I've come to see failure to integrate security into mainline development as an inevitable precursor to insecure designs, and conflict over them.)

One of the ways in which the Failure Modes document characterizes attacks is a column of "Violates traditional notion of access/authorization," or "Is the attacker [technologically] misusing the system?" This column is a poster child for why diagrams are so helpful in threat modeling.

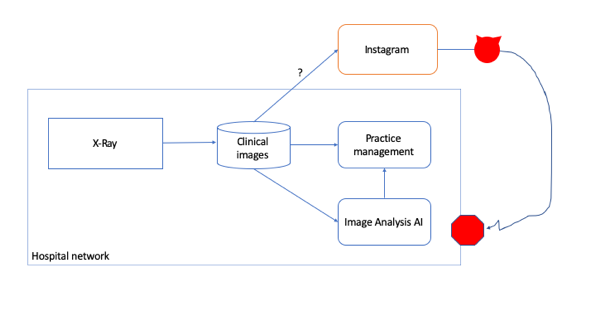

For example, the very first filled out attack (S.1, Perturbation) has a scenario of "Noise is added to an X-Ray image, which makes the predictions go from normal scan to abnormal," and "no special privileges are required..to perform the attack." My mental model of an X-Ray analysis system is roughly sketched here:

Even if the X-Ray file goes to Instagram, and even if that upload is a DICOM file rather than a png, once the attacker has tampered with it, they can't re-insert the image without special privileges to write to the clinical images data store. Additionally, my take is that the attacker is engaged in at least one traditional technical misuse (tampering). Now, generally, I would not expect a STRIDE-centered analysis to result in discovery of perturbation attacks without special training for the analysts. However, I do think that showing the system model under consideration, and the location of the attackers, would clarify this part of the model. Another approach would be to precisely describe what they mean by 'misuse' and 'traditional notions.'

That brings me to the third document, "Threat Modeling AI/ML Systems," which "supplements existing SDL threat modeling practices by providing new guidance on threat enumeration and mitigation specific to the AI and Machine Learning space." I would expect the documentation to tie to what current practices are, and much of it seems to be refinements to the "diagram" and "identify" steps, along with "changing the way you view trust boundaries," and a deep focus on reviews.

ML-specific refinements to diagramming and enumeration are helpful. For example, questions such as "are you training from user-supplied inputs?" seem to be restatable as "did you diagram?" ("Create a data flow diagram that includes your training data.") Threat identification seems to include both the 11 specific attacks mentioned above, and "Identify actions your model(s) or product/service could take which can cause customer harm online or in the physical domain." I like the implied multi-layered investigation: one level is very generalized, the other very specific. We might call that a "multi-scale view" and that's an approach I haven't seen explored much. At the same time, the set of review questions for identifying customer harm seems very Tay-focused: 3 of the 8 questions refer to trolling or PR backlash. I am surprised to see very little on bias in the review questions, the attacks, or the unintentional failures. Biases, like racial differences in facial recognition effectiveness, or racially stereotyped names being rated differently in a resume screening app, are an important failure mode, and might be covered under 'distributional shifts.' But bias can carry over from training data that is representative, and so I think that's a poor prompt. It's also possible that Microsoft covers such things under another part of the shipping process, but a little redundancy could be helpful.

The "change the way you view trust boundaries" section puzzles me. It seems to be saying "make sure you consider where your training data is coming from, and be aware there are explicit trust boundaries there." I'm unsure why that's a change in how I view them, rather than ensuring that I track the full set.
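One way to "track the full set" is to write the data flows down as data and list every flow that crosses a boundary, training inputs included. The following is a minimal sketch of that idea, not anything taken from the Microsoft documents: the element names, zone names, and flows are all hypothetical, and a real diagram would have many more of each.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """One arrow on a data flow diagram."""
    source: str
    dest: str
    data: str


# Hypothetical elements, each assigned to a hypothetical trust zone.
ZONES = {
    "end_user": "internet",
    "public_dataset": "third_party",
    "training_store": "ml_pipeline",
    "trainer": "ml_pipeline",
    "model_api": "production",
}

# Hypothetical flows, including the ones that feed training.
FLOWS = [
    Flow("end_user", "training_store", "user-supplied feedback"),
    Flow("public_dataset", "training_store", "scraped corpus"),
    Flow("training_store", "trainer", "training batches"),
    Flow("trainer", "model_api", "trained model"),
    Flow("end_user", "model_api", "inference requests"),
]


def boundary_crossings(flows, zones):
    """Return the flows whose source and destination sit in different zones."""
    return [f for f in flows if zones[f.source] != zones[f.dest]]


if __name__ == "__main__":
    for flow in boundary_crossings(FLOWS, ZONES):
        print(f"{flow.source} -> {flow.dest}: {flow.data}")
```

In this toy model, two of the four crossings are training-data flows. They show up simply because the training sources were put on the diagram, which is the sense in which I read the advice as "track the full set" rather than as a new way of viewing boundaries.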

Lastly, a lot of the 'threat modeling' document is focused on review and review questions. I've written about reviews versus conversations before ("Talking, Dialogue and Review") and want to re-iterate the importance of threat modeling early. The heavy focus on review questions implies that threat modeling is something a security advisor leads at the end of a project before it goes live, and it would be a shame if that's a takeaway from these documents. The questions could be usefully reframed into the future. For example:

- If your data is poisoned or tampered with, how will you know?

- Will you be training from user-supplied inputs?

- What steps will you take to ensure the security of the connection between your model and the data?

- What will you do to onboard each new data source? (This is a new question, rather than a re-frame, to illustrate an opportunity from early collaboration.)
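As an illustration of what an early answer to the first question might look like, here is a minimal sketch that records a SHA-256 manifest of a training data directory at onboarding time and later reports what has changed. This is an assumption about one possible control, not something the Microsoft documents prescribe, and the function names (`build_manifest`, `diff_manifest`) are hypothetical. It only detects tampering with files at rest after the manifest was taken; it says nothing about data that was already poisoned at the source.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large datasets need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> dict[str, str]:
    """Map each file's path (relative to data_dir) to its SHA-256 digest."""
    return {
        p.relative_to(data_dir).as_posix(): sha256_of(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def diff_manifest(trusted: dict[str, str], current: dict[str, str]) -> dict[str, list[str]]:
    """Compare a trusted manifest against the current state of the data."""
    return {
        "added": sorted(set(current) - set(trusted)),
        "removed": sorted(set(trusted) - set(current)),
        "modified": sorted(
            name for name in trusted.keys() & current.keys()
            if trusted[name] != current[name]
        ),
    }
```

The trusted manifest would need to live somewhere the training pipeline cannot write to; otherwise an attacker who can tamper with the data can also update the manifest.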

Again, the question of how we can analyze the security properties of an ML system before that system is fielded is an important one. Very fundamental questions, like how to scope such analysis, remain unanswered. What the 'best' form of analysis might be, and for which audience, remains open. Is it best to treat these new attacks as subsets of STRIDE? (I'm exploring that approach in my recent LinkedIn Learning classes.) Alternatively, perhaps it will be best, as this paper does, to craft a new model of attacks.

The easiest prediction is that as we roll into the 2020s these questions will be increasingly important for many projects, and I look forward to a robust conversation about how we do these things in structured, systematic, and comprehensive ways.

Update, Feb 28: See also BIML Machine Learning Risk Framework.