Threat Modeling & IoT

Threat modeling internet-enabled things is similar to threat modeling other computers, with a few special tensions that come up over and over again. You can start threat modeling IoT with the four-question framework:

- What are you building?

- What can go wrong?

- What are you going to do about it?

- Did we do a good job?

But there are specifics to IoT, and those specifics influence how you think about each of those questions. I'm helping a number of companies who are shipping devices, and I would love to fully agree that "consumers shouldn't have to care about the device's security model. It should just be secure. End of story." I agree with Don Bailey on the sentiment, but frequently the tensions between requirements mean that what's secure is not obvious, or that "security" conflicts with "security." (I model requirements as part of 'what are you building.')

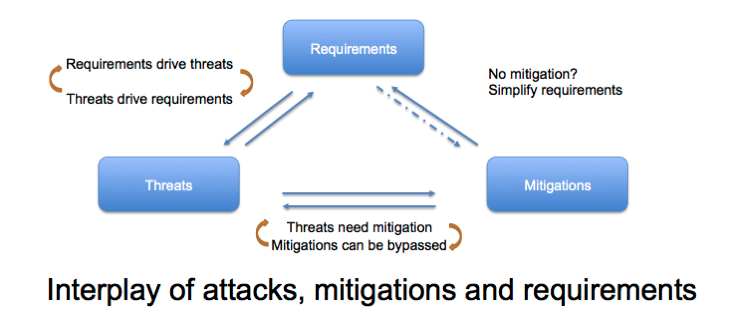

When I train people to threat model, I use this diagram to talk about the interaction between threats, mitigations, and requirements:

That interaction takes on a particular flavor when working with internet-enabled things, and it changes from device to device. Still, there are some important commonalities.

When looking at what you're building, IoT devices typically lack sophisticated input devices like keyboards or even buttons, and sometimes their local output is a single LED. One solution is to run a listening web server on the device and to pay for a sticker with a unique admin password, which then drives customer support costs. Another solution is to have the device not listen but reach out to your cloud service, and let customers register their devices to their cloud account. This has security, privacy, and COGS tradeoffs. [Update: I originally said downsides, but it's more that a different set of attack vectors becomes relevant in security. COGS is an ongoing commitment to operations; privacy is dependent on what's sent or stored.]

When asking what can go wrong, your answers might include "a dependency has a vulnerability," or "an attacker installs their own software." An example of security being in tension with itself is the ability to patch your device yourself. If I want to be able to recompile the code for my device, or put a safe version of zlib on there, I ought to be able to do so. Except if I can update the device, so can attackers building a botnet, and 99.n% of typical consumers for a smart lightbulb are not going to patch themselves. So we get companies requiring signed updates. Then we get to the reality that most consumer devices last longer than most Silicon Valley companies. So we want to see a plan to release the key if the company is unable to deliver updates. And that plan runs into the realities of bankruptcy law: the signing key is an asset, it's hard to value, and bankruptcy trustees are unlikely to give away assets. There's a decent pattern (allegedly from the world of GPU overclocking), which is that you can intentionally make your device patchable by downloading special software and moving a jumper. This requires a case that can be opened and reclosed, and a jumper or other DFU hardware input, and can be tricky on inexpensive or margin-strained devices.
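To make the signed-update-plus-jumper pattern concrete, here is a minimal sketch of the acceptance decision a bootloader might make. Everything named here (`Update`, `should_install`, `demo_verifier`, the `jumper_set` flag) is hypothetical and for illustration only. In particular, `demo_verifier` is a placeholder and not real cryptography; an actual device would check a public-key signature against a vendor key baked into the firmware.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Update:
    image: bytes      # firmware image offered to the device
    signature: bytes  # signature shipped alongside the image


def should_install(
    update: Update,
    vendor_signature_ok: Callable[[Update], bool],
    jumper_set: bool,
) -> bool:
    """Decide whether to accept an update (hypothetical policy)."""
    # Vendor-signed images install without any physical interaction.
    if vendor_signature_ok(update):
        return True
    # Anything else requires proof of physical presence: the owner
    # opened the case and moved the jumper.
    return jumper_set


def demo_verifier(update: Update) -> bool:
    # Placeholder only: a real verifier would check a public-key
    # signature over update.image, not compare to a constant.
    return update.signature == b"vendor-signed"


vendor_update = Update(image=b"fw-2.0", signature=b"vendor-signed")
unsigned_image = Update(image=b"custom-build", signature=b"")

print(should_install(vendor_update, demo_verifier, jumper_set=False))   # True
print(should_install(unsigned_image, demo_verifier, jumper_set=False))  # False
print(should_install(unsigned_image, demo_verifier, jumper_set=True))   # True
```

The point of the sketch is the asymmetry: a remote botnet operator cannot move a jumper, while an owner with the device in hand can still install their own build after the vendor is gone. It does nothing about an attacker with physical access, which is a separate item for the threat model.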

That COGS (cost of goods sold) downside is not restricted to security, but has real security implications, which brings us to the question of what are you going to do about it. Consumers are not used to subscribing to their stoves, nor are farmers used to subscribing to their tractors. Generally, both have better things to do with their time than to understand the new models. But without subscription revenue, it's very hard to make a case for ongoing security maintenance. And so security comes into conflict with consumer mental models of purchase.

In the IoT world, the question of did we do a good job becomes have we done a good enough job? Companies believe that there is a first-mover advantage, and this ties to points that Ross Anderson made long ago about the tension between security and economics. Good threat modeling helps companies get to those answers faster. Sharing the tensions helps us understand what the tradeoffs look like, and with those tensions in view, organizations can better define their requirements and get to a consistent level of security faster.

I would love to hear your experiences about other issues unique to threat modeling IoT, or where issues weigh differently because of the IoT nature of a system!

(Incidentally, this came from a question on Twitter; threading on Twitter is now worse than it was in 2010 or 2013, and since I have largely abandoned the platform, I can't figure out who's responding to what. A few good points I see include: