C2PA Threat Modeling

What can we learn from the C2PA security considerations document?

The Coalition for Content Provenance and Authenticity (C2PA) has released a “Security Considerations” document, which is pretty much a threat model, and I want to use it for a Threat Model Thursday post. As always, my goal is to analyze the document to see what we can learn, and even engage in criticisms, which are intended to be helpful.

C2PA is an industry coalition focused on the threat of AI misinformation. It addresses that threat by creating ways to authenticate images that haven't been altered, and by showing what alterations have been performed on an image.

There is a lot to like here. The document is generally easy to read, and it starts off with a methodology overview, which is great. I'd like to have seen more specifics about process choices (for example, did they ever create a data flow diagram, do a full STRIDE analysis, or create a “kill chain”?). My understanding is that the process amounted to “we brought together really smart people, and they worked it out as they went.” In a process like this, where a group of presumably expert people come together over time, and both the people and the system change across versions, it would be nice to explain how they worked on it.

What are we working on?

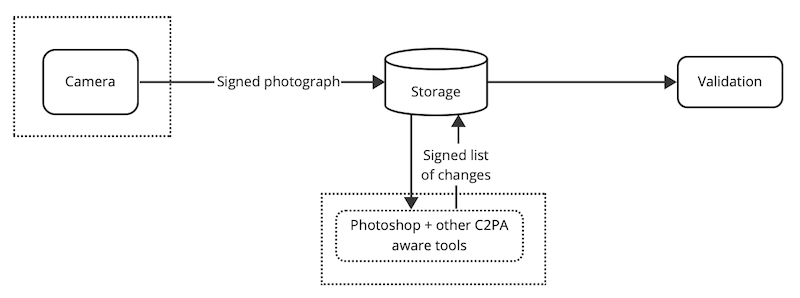

It would be good to have a graphic representation of the system as they see it. There are some in the parent doc, and I will admit to not having read all 172 pages, but Figure 3 (Elements) and Figure 13 (Entities) are not overviews that helped me. My swag at a representation is above.

The representation might be a data flow diagram, or it might be some other form of diagram that concisely shows the relationships between parties. And yes, my “Signed photograph” is a dramatic simplification of their manifest with its signature, claims, and assertions. Models simplify, and that's why we use them.

What can go wrong?

Section 3, the security model, is part of “what can go wrong,” and is tied to Integrity, Availability and Confidentiality (in that order); protection of personal information is covered in a separate section. I would have liked to see explicit discussion of the non-repudiation property here.

Section 4 is labeled as a threat model, and it starts off with assumptions. Nice! All too rare to see this. Threats are grouped by goals (aka impact), and each has a specific threat, with description, pre-requisites, impact analysis, and security guidance (which is akin to mitigations, for a standards document). Without trying to re-do the work, it seems reasonably complete and communicative.

I'd like to see linkability considered as a first-class threat: being able to determine that the same person took two given photos is meaningful for journalists and activists. How to handle linkability is complex. A journalist may want all their news photos to be seen as a set, but not want family photos taken at home with the same camera to be in that set.
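To make the linkability concern concrete, here is a minimal Python sketch. It assumes, hypothetically, that a verifier can read a signer public key out of each photo's manifest, and that a camera could sign with a different key per context; `SignedPhoto`, `linkable`, and the placeholder key bytes are illustrative names, not anything from the C2PA specification, and per-context keys are one possible approach rather than something the Security Considerations document proposes.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class SignedPhoto:
    name: str
    # Hypothetical: the signer's public key bytes as read from the photo's manifest.
    signer_public_key: bytes


def key_fingerprint(public_key: bytes) -> str:
    """SHA-256 fingerprint of a public key, as hex."""
    return hashlib.sha256(public_key).hexdigest()


def linkable(a: SignedPhoto, b: SignedPhoto) -> bool:
    """True if both photos carry the same signer key, so anyone who can
    read both manifests can tell they came from the same signer."""
    return key_fingerprint(a.signer_public_key) == key_fingerprint(b.signer_public_key)


# Placeholder byte strings stand in for real public keys (hypothetical).
protest = SignedPhoto("protest.jpg", b"news-context-key")
rally = SignedPhoto("rally.jpg", b"news-context-key")
birthday = SignedPhoto("birthday.jpg", b"family-context-key")

print(linkable(protest, rally))     # True: the news photos form a set
print(linkable(protest, birthday))  # False: the family photo stays out of it
```

If one key signs everything, every photo links to every other. Separate keys give the journalist the split they want, at the cost of managing and protecting more than one key.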

I’d also like to see an “accepted dangers” section. For example, is the threat of confusion between one John Smith and another in scope? What about confusion between johnsmith@example.org and johnsmith@example.com? A standard probably can’t address the former, but can mandate that compliant implementations display both human-readable and globally unique names, and talk about the levels of validation of those names. (Valid when the account was created? Valid when the image was signed?)
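The display suggestion is simple enough to sketch. This minimal Python example assumes a hypothetical `SignerIdentity` record holding a friendly name, a globally unique name, and a note on when the name was validated; `SignerIdentity`, `render_signer`, and the field names are illustrative and do not come from the C2PA specification.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class SignerIdentity:
    display_name: str             # human-readable and not unique, e.g. "John Smith"
    unique_id: str                # globally unique, e.g. an email address
    validated_on: Optional[date]  # when validation happened, if it happened at all
    validated_when: str           # which event was validated, e.g. "account creation"


def render_signer(identity: SignerIdentity) -> str:
    """Show the friendly name, the unique name, and how the name was validated."""
    if identity.validated_on is None:
        validation = "not validated"
    else:
        validation = (f"validated at {identity.validated_when}, "
                      f"{identity.validated_on.isoformat()}")
    return f"{identity.display_name} <{identity.unique_id}> ({validation})"


org = SignerIdentity("John Smith", "johnsmith@example.org", date(2024, 3, 1), "signing time")
com = SignerIdentity("John Smith", "johnsmith@example.com", date(2019, 6, 15), "account creation")
print(render_signer(org))  # John Smith <johnsmith@example.org> (validated at signing time, 2024-03-01)
print(render_signer(com))  # John Smith <johnsmith@example.com> (validated at account creation, 2019-06-15)
```

The two John Smiths render differently, and the reader can see which validation event each name rests on.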

At least one threat is the violation of an assumption (4.2.2.2, spoofing signed C2PA via a stolen key, violates assumption 4.1, “Attackers do not have access to private keys.”). Many threats are violations of assumptions, and methodology designers should consider whether those links need to be listed explicitly and consistently. As far as I can tell, the rest of the assumptions don't list the threats that manifest when they're violated.
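One way to keep those links consistent is to record them in both directions and check them mechanically. This is a minimal Python sketch of that idea; `Assumption`, `Threat`, and `consistency_report` are hypothetical names, and the back-reference from 4.1 to 4.2.2.2 is deliberately left out of the sample data to show what the check reports, not as a claim about the C2PA document's contents.

```python
from dataclasses import dataclass, field


@dataclass
class Assumption:
    ident: str
    text: str
    # Threats that manifest if this assumption is violated.
    violated_by: set[str] = field(default_factory=set)


@dataclass
class Threat:
    ident: str
    text: str
    # Assumptions whose violation this threat represents.
    violates: set[str] = field(default_factory=set)


def consistency_report(assumptions, threats):
    """List places where assumptions and threats fail to reference each other."""
    by_id = {a.ident: a for a in assumptions}
    problems = []
    for t in threats:
        for a_id in sorted(t.violates):
            a = by_id.get(a_id)
            if a is None:
                problems.append(f"threat {t.ident} cites unknown assumption {a_id}")
            elif t.ident not in a.violated_by:
                problems.append(f"assumption {a_id} does not list threat {t.ident}")
    for a in assumptions:
        if not a.violated_by:
            problems.append(f"assumption {a.ident} lists no threats")
    return problems


keys = Assumption("4.1", "Attackers do not have access to private keys.")
stolen_key = Threat("4.2.2.2", "Spoofing signed C2PA via a stolen key", violates={"4.1"})

for problem in consistency_report([keys], [stolen_key]):
    print(problem)
# assumption 4.1 does not list threat 4.2.2.2
# assumption 4.1 lists no threats
```

Adding `"4.2.2.2"` to `keys.violated_by` makes the report come back empty.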

What are we going to do about it?

There is no separate list of “What are we going to do about it?” items. A document like this generally handles ‘what to do’ by building whatever defenses it can into the specification, and here the form is much closer to, say, IETF RFCs than to Jira tickets with mitigations. It would be nice to see an implementor-focused list of threats and required security controls. That is, if you make cameras, ensure you address threats like key extraction. If you're making editing software, do these other things... The C2PA group has clearly thought a lot about those; summarizing them would be great.

Did we do a good job?

The relationship to other C2PA documents is complex. I'd have liked a cleaner relationship between the Technical Specification and the Security Considerations. The Spec contains a section (18, Information Security) that has threats and security considerations, and also a section on harms, misuse and abuse that branches into yet another document, C2PA Harms Modelling, which seems to cover a related set of issues. I think the way they fit together is that Security Considerations covers threats to the system, while Harms Modelling covers threats via the system.

Final thoughts

As a nit, the word “asset” is confusing, even though they explain their unusual use. They’d have been better off with “image,” even though asset refers to more than images.

I've spoken to a person involved with the process to help me understand what's going on; all remaining mistakes are my own.