AI Insurance Won't Save You

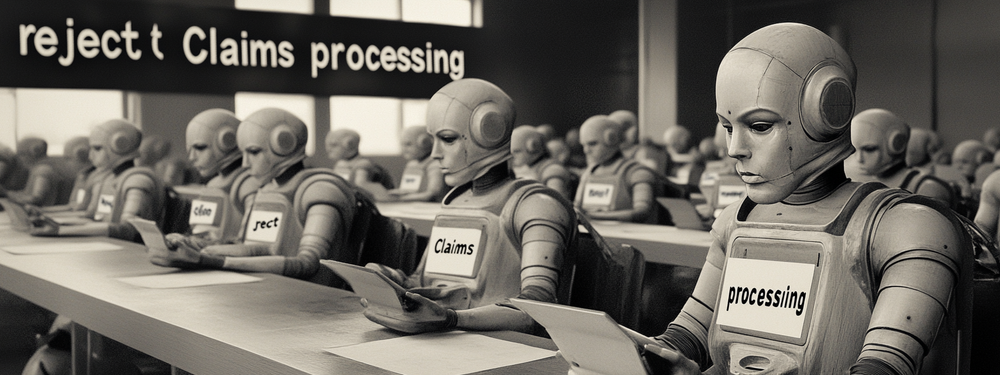

LLM Insurance is, and will remain, a great source of insurer profits.

There’s press about AI insurance, and while I don’t want to critique any specific firm, I’d like to offer a prediction: no customer will ever see a payout. We can see the dynamic that’s emerged in cybersecurity and learn from it.

Cybersecurity insurance

First and foremost, in cybersecurity, insurers generally have little idea of what leads to bad problems, so premiums were consistently mispriced while they learned, often draining reserves, after which premiums shot sky high.

The only insurer I see thoughtfully managing this is Coalition, but even they have lots of problems, such as attribution, of the form “does our insured control this resource?” For example, late one Saturday night in the Log4Shell mess, they sent me an email saying Shostack + Associates had Log4Shell exposure. (We were, at that time, a Coalition customer.) I emailed back because I was pretty sure they were wrong. A week later, I heard back that they were indeed wrong, but nothing about the source of the error. I assume that they’d attributed a real vuln at one of our cloud providers to us, but I don’t know.

Back to the general case: cyber insurers have a set of exceptions that lead to policies not paying out, including acts of war but, much more importantly, misrepresentation.

A cynical way to think about this: because insurance is competitive, new policies are sold by asking a bunch of vague questions. Checking your answers is expensive, so insurers don't check unless you make a claim. If they find errors, omissions, misrepresentations, etc., they’ll choose not to pay out, possibly reasonably. This is a variant of “no one is PCI compliant at the time of a breach.”

How does this translate to AI?

Insurance companies will rake it in. Execs at AI companies will make billions because they can sell more AI. The venture capitalists backing new insurance businesses will make piles of cash. The companies relying on insurance to “manage risk” will get “disrupted” and be shocked when they get no payout. Courts and insurance commissioners will find that enterprises buying AI insurance are “sophisticated actors” who should have foreseen these problems, and not force payouts. Consumers will get left with nothing.

But, I hear you asking: Won’t insurers want to pay out to show they’re not selling team-moral-hazard journeys, and so push for effective controls? Yes, Virginia, there is a Santa Claus.

More seriously, it’s not inconceivable, except that by design, LLMs hallucinate, and we don’t yet know how to effectively control that (although some patterns are starting to emerge). In my RSA talk with Tanya Janca, we talked about code and data being intermingled, and that’s a fundamental issue, not one where today’s practices can reliably avert problems. (See about 31 minutes in.)

Should my company buy AI insurance?

No.

Why are you posting this now?

This was written in May, and when I went to update it with a link to “Insurers balk at paying out huge settlements for claims against AI firms,” I discovered that it was still in a draft state.

[Updated Nov 23 to add a TechCrunch story, “AI is too risky to insure, say people whose job is insuring risk”: “Major insurers including AIG, Great American, and WR Berkley are asking U.S. regulators for permission to exclude AI-related liabilities from corporate policies.”]

This post is not intended to disparage Coalition. A training customer, in the insurance business, insisted we get cyber insurance. When, through no fault of our own, rates shot up to about 20% of the value of the contract, that customer declined to let us pass on the increased costs of meeting their needs.