Threat Modeling Google Cloud (Threat Model Thursday)

NCC has released a threat model for Google Cloud Platform. What can it teach us?

In “Threat Modelling Cloud Platform Services by Example: Google Cloud Storage,” Ken Wolstencroft of NCC presents a threat model for Google Cloud Storage, and I’d like to take a look at it to see what we can learn. As always, and especially in these Threat Model Thursday posts, my goal is to point out interesting work in a constructive way.

Let me start by saying that I love that there’s a methodology section at the top. I’ll add that not everything in the document is introduced in the methodology section, and I’ll point out the exceptions as we go. The approach is reasonably and implicitly tied to the Four Question Frame, so I’ll use that to organize this post.

What are we working on?

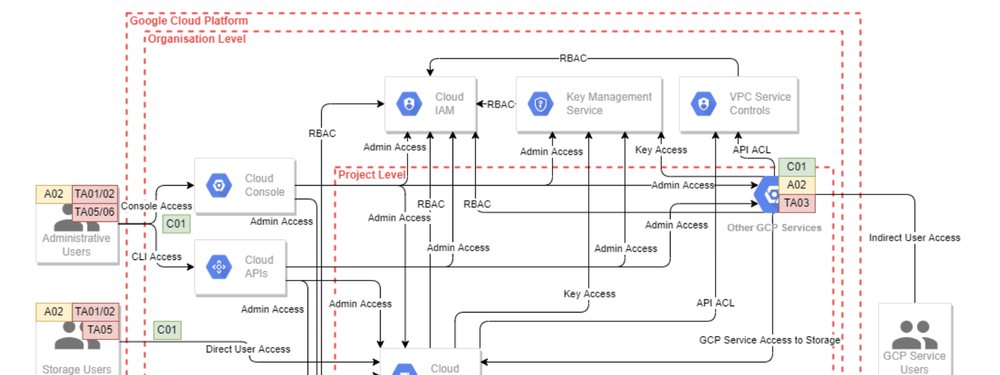

There are three views into what NCC looks at: “key features,” a diagram, and a list of assets. The relationship between the feature list and the diagram is not stated. I think that the key features include both processes and data flows shown in the diagram. The diagram also includes decoration for assets, threat actors, and “default base security controls.” The last includes https, which is, surprisingly, not applied everywhere (or not shown as applying everywhere). Interestingly, the diagram shows three nested boundaries, “Google Cloud Platform,” “Organization level” and “project level,” but nothing is shown at the cloud platform level.

The diagram could be improved by higher-contrast text. The gray on white is hard on my eyes. I don’t want to make too much of this, but making our diagrams easy to read pays off, because we can spend our mental energy on other things.

The list of threat actors includes “internal attacker,” “internal malicious user,” and Google engineers. I think that means the first two are internal to the GCP customer. It also includes compromised services? (The list inexplicably stops before listing TA007, James Bond.)

What can go wrong?

This starts with a section, “attack goals,” which nicely acknowledges that “An attacker’s motives and goals are often hard to accurately predict...” Five of the six goals are closely aligned with STRIDE (lacking repudiation), and the sixth, “host malicious content,” is a nice illustration of the danger of limiting oneself to STRIDE. That said, repudiation does show up, for example in T11 and T12.

This is followed by a list of “Potential System Weaknesses” and a “List of Potential Threats,” an interesting split. Here, weaknesses are “opportunities for stronger security configurations,” and threats seem to be threat actions or impacts. “T02 Guessing of Google Cloud Platform credentials” is an action; “T04 Authenticated access to Google Cloud Storage bucket” is an impact. Also, here, the weakness of STRIDE as a categorization shines through: many threats are listed in multiple categories. (I prefer to use STRIDE to prompt my thinking, rather than categorize what I find.)
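To make the action/impact split and the multiple-category problem concrete, here is a minimal sketch in Python. The two threat titles come from the NCC document; the `kind` labels are my reading, and the STRIDE assignments are purely illustrative assumptions, not NCC's actual categorization. The `Threat` class and `in_multiple_categories` function are hypothetical names of my own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threat:
    id: str
    title: str
    kind: str          # "action" or "impact" (my reading, not NCC's label)
    stride: frozenset  # ASSUMED categories, for illustration only


THREATS = [
    Threat("T02", "Guessing of Google Cloud Platform credentials",
           "action", frozenset({"Spoofing"})),
    Threat("T04", "Authenticated access to Google Cloud Storage bucket",
           "impact", frozenset({"Information disclosure", "Tampering"})),
]


def in_multiple_categories(threats):
    """Return the threats that land in more than one STRIDE category."""
    return [t for t in threats if len(t.stride) > 1]


multi = in_multiple_categories(THREATS)
for t in multi:
    print(t.id, t.kind, sorted(t.stride))
```

If most of a real list comes back from a check like this, that's a hint the categories are working better as prompts than as bins.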

What are we going to do about it? (threat mitigation)

There’s a nice list of controls, motivated by the threats and clearly traceable to them. They re-iterate each threat as a headline and propose many controls that may help mitigate it. I’m somewhat surprised that they’ve stopped assigning short tags here (there’s no C03, C04...), since tags for controls seem both natural and a useful way to assess which controls would work most broadly.
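As a rough sketch of why control tags would help: once each control has a tag, counting how many threats each one touches is a few lines of Python. Everything here is hypothetical. The NCC document does not assign control tags, so the `C01`-style tags and the threat-to-control mapping below are invented for illustration, as is the `control_coverage` function name.

```python
from collections import Counter

# HYPOTHETICAL mapping from threat IDs to control tags.
# The tags and pairings are made up; they are not from the NCC document.
CONTROLS_BY_THREAT = {
    "T02": ["C01", "C02"],
    "T04": ["C01", "C03"],
    "T11": ["C04"],
    "T12": ["C01", "C04"],
}


def control_coverage(controls_by_threat):
    """Rank controls by how many distinct threats they help mitigate."""
    counts = Counter()
    for controls in controls_by_threat.values():
        # set() so a control listed twice under one threat counts once
        counts.update(set(controls))
    # Sort by count (descending), then by tag, for a stable ranking
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))


ranking = control_coverage(CONTROLS_BY_THREAT)
for tag, n in ranking:
    print(f"{tag}: mitigates {n} threat(s)")
```

A count like this says nothing about how well a control works against any one threat, but it does surface the controls that show up again and again.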

Did we do a good job?

The explicit methodology section isn’t complemented by an explicit assessment of the work, but rather by a conclusion, which is that threat modeling does find interesting weaknesses that can be addressed by appropriate use of features.