Strategy for threat modeling AI

Clarifying how to threat model AI

There’s an inordinate amount of confusion around threat modeling and AI. In this post, I want to share some of the models I’m currently using to simplify and focus conversations into productive analysis. Like everything touched by LLMs, they're rapidly changing, and so the images have dates embedded.

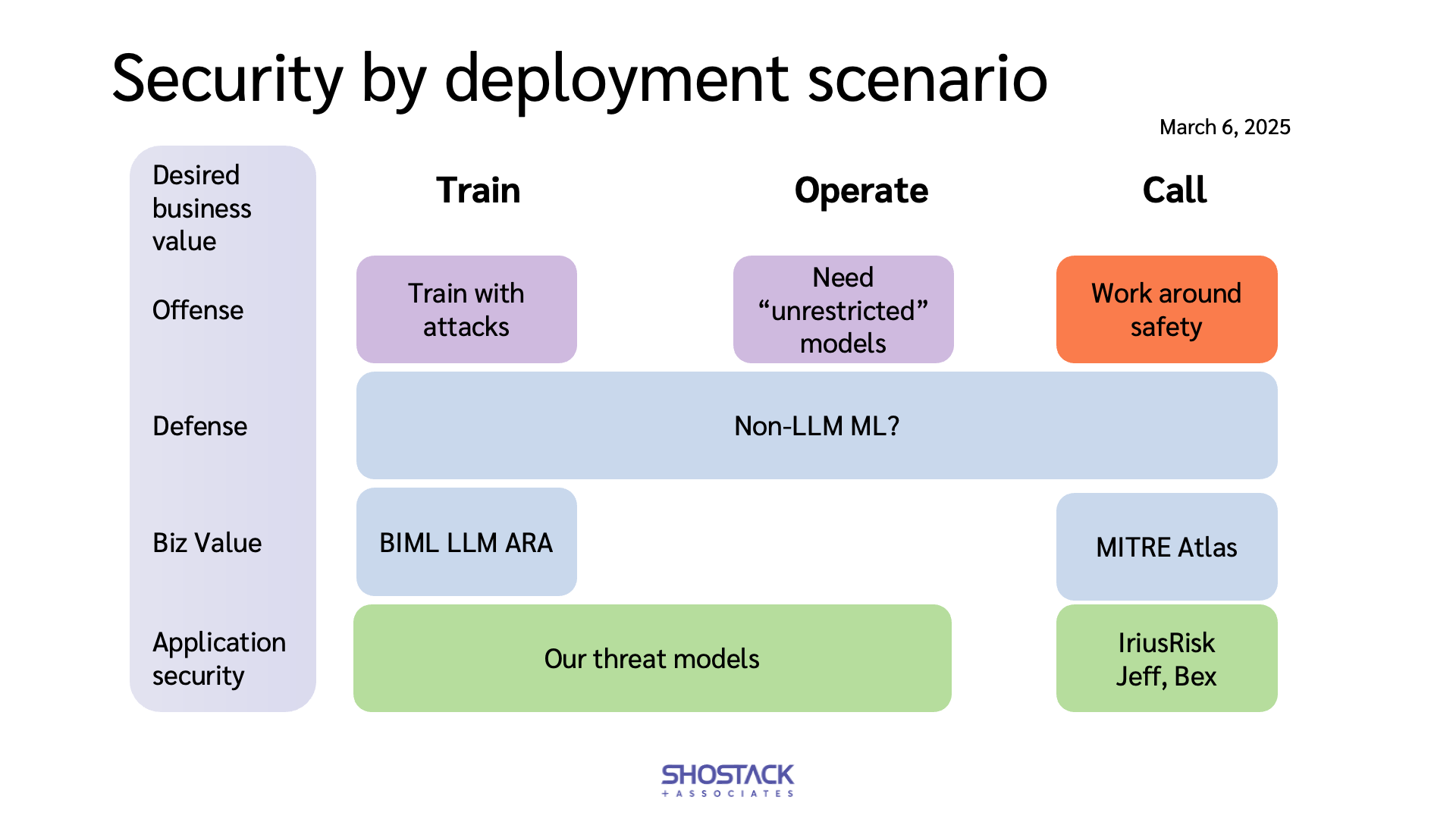

First, there are three main ways people run LLMs:

- We train our own model (and then deploy it).

- We operate a model (e.g., from Hugging Face).

- We call a model via API.

There are sub-patterns within each of these, but whichever pattern you're in, or a given project is in, AI affects security in four types of scenarios:

- AI for offense (write me a phishing campaign, make a deepfake video).

- AI for defense (spam filters, anti-fraud, etc.).

- AI for business value (“Here's our AI chatbot to help you!”)

- AI for software development, including AI for securing code that you write, possibly with AI help.

The way you think about security depends on which of these describes what you’re working on and, beyond that, on the intersection of the two lists. For example, if you want to train an LLM for offense, you want a dataset of successful attacks. If you want to call someone else’s LLM for offense, you need to bypass their safety tools. (Google recently released an in-depth report, Adversarial Misuse of Generative AI, that covers how attackers are using their LLMs.)

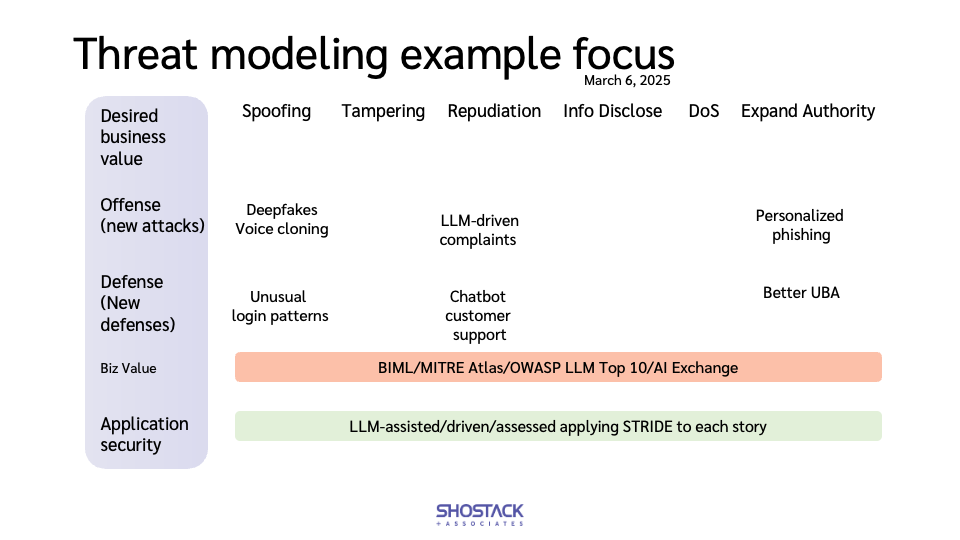

We can extend how we use structures like STRIDE to assess ‘how does AI change what can go wrong as we’re training an LLM?’ Here, I find the BIML ARA for LLMs helpful. It has a heavy focus on model training. In contrast, MITRE ATLAS (https://atlas.mitre.org/matrices/ATLAS) seems more focused on those calling or operating an LLM.

We can also go deeper. The offense scenario changes the way you threat model authN. AI for defense may change ‘how are we going to detect if X is happening.’ If you operate an LLM that’s customer-exposed for business value, you need to put safety tools in place, while if you call someone else’s, you have to worry about information disclosed in each call.
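One way to keep the intersection in view is to treat it as a small grid: how the model is run on one axis, the security scenario on the other. The sketch below is illustrative only. The names `Deployment`, `Scenario`, `NOTES`, and `threat_model_grid` are my own assumptions, not part of any framework, and only the four cells discussed in this post are filled in.

```python
from enum import Enum
from itertools import product


class Deployment(Enum):
    TRAIN = "train our own model"
    OPERATE = "operate a model"
    CALL = "call a model via API"


class Scenario(Enum):
    OFFENSE = "AI for offense"
    DEFENSE = "AI for defense"
    BUSINESS = "AI for business value"
    DEVELOPMENT = "AI for software development"


# Hypothetical notes: only the cells this post gives examples for.
NOTES = {
    (Deployment.TRAIN, Scenario.OFFENSE): "Attacker wants a dataset of successful attacks.",
    (Deployment.CALL, Scenario.OFFENSE): "Attacker needs to bypass the provider's safety tools.",
    (Deployment.OPERATE, Scenario.BUSINESS): "Customer-exposed: put safety tools in place.",
    (Deployment.CALL, Scenario.BUSINESS): "Worry about information disclosed in each call.",
}


def threat_model_grid():
    """Return every (deployment, scenario) cell, with its note or None if unexplored."""
    return {cell: NOTES.get(cell) for cell in product(Deployment, Scenario)}


if __name__ == "__main__":
    for (deployment, scenario), note in threat_model_grid().items():
        print(f"{deployment.value} x {scenario.value}: {note or 'not yet analyzed'}")
```

Printing the grid makes the empty cells visible, which is a reasonable prompt for asking which combinations apply to a given project before picking a framework.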

There's a nearly infinite set of AI risk management guidance, most of which is literally worth less than the time it takes to read it, because it's one or more of:

- Unclear or even confused about what scenario it's talking about.

- Pablum that says nothing.

- Written by a committee that either outright contradicts itself or won't prioritize its kitchen sink's worth of advice.

- Defining unrealistic tasks (“Regularly monitor and review the LLM outputs” is, empirically, something businesses are not going to fund) or tasks that can't be completed, and assigning them to a reader who may well have no more information than the authors (“Estimate the likelihood that an attacker will...”).

To be fair, it’s hard. AI can be so broadly transformational that the list of risks can be nearly endless.

If you’d like help securing your LLMs, why not reach out via our contact form?

Midjourney, “a photograph of a robot, sitting in a library with high ceilings, frantically juggling glowing balls.”