Security Rarely Flows Downhill

I was recently in a meeting at a client site where there was a lot of eye rolling going on. The eye rolling came from not understanding the ground rules, and one of those ground rules is that security rarely flows downhill.

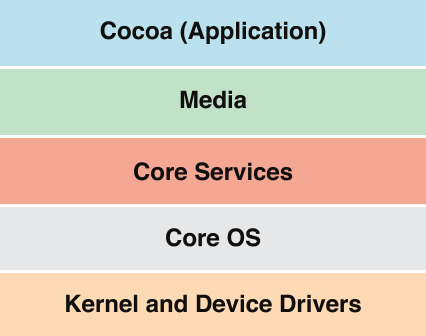

That is, if you're an application in a stack like the one pictured, you are vulnerable to the components underneath you. The components underneath should isolate themselves from (protect themselves against) things higher in the stack.

I can't talk about the meeting in detail (client confidentiality), so I'm going to pick an article I saw that shows some of the same thinking. I'm using that article as a bit of a straw man. This article was convenient, not unique. These points are more strongly made if they are grounded in real quotes rather than ones I make up.

> “The lack of key validation (i.e. the verification that public keys are not invalid) is therefore not a major security risk. But I believe that validating keys would make Signal even more secure and robust against maliciously or accidentally invalid keys,” the researchers explained.
>
> In this farfetched example, researchers explain, communications would be intentionally compromised by the sender. The goal, could be to give the message recipient the appearance of secure communications in hopes they may be comfortable sharing something they might not otherwise.
>
> ...
>
> “People could also intentionally install malware on their own device, intentionally backdoor their own random number generator, intentionally publish their own private keys, or intentionally broadcast their own communication over a public loudspeaker. If someone intentionally wants to compromise their own communication, that’s not a vulnerability,” Marlinspike said.

(I'm choosing not to link to the article because I don't mean to call out the people making that argument.)

So here's the rule: security doesn't flow downhill without extreme effort. If you are an app, it is hard to protect the device as a whole. It is hard to protect yourself if the user decides to compromise their device or mess up their RNG. And Moxie is right not to try to improve the security of Android or iOS against these attacks: it's very difficult to do from where he sits. Security rarely flows downhill.

There are exceptions. Companies like Good Technology built complex crypto to protect corporate data on devices that might be compromised. As best I understand it, it worked by having a server send a set of keys to the device; the outer layer of Good decrypted the real app and data with those keys, then got more keys. And they had some anti-debugging lawyers in there (oops, is that a typo?) so that the OS couldn't easily steal the keys. It was about the best you could do with the technology that phone providers were shipping. It is, netted out, a whole lot fairer than employers demanding the ability to wipe your personal device and your personal data.

So back to that meeting. A security advisor from a central security group was trying to convince a product team of something very much like "the app should protect itself from the OS." He wasn't winning, and he was trotting out arguments like "it’s not going to cost [you] anything." But that was obviously not the case. The cost of anything is the foregone alternative, and these "stone soup improvements" to security (to borrow from Mordaxus) were going to come at the expense of other features. Even if there was agreement on what direction to go, it was going to take another few meetings to get these changes designed, and then it was going to cost a non-negligible number of programmer days to implement, and more to test and document.

That's not the most important cost. Far more important than the cost of implementing the feature was the effort to reach agreement on these new features versus others.

But even that is not the most important cost. The real price was lost respect for the central security organization. Hearing those arguments made it that much less likely that those engineers would see the "security advisor," or his team, as helpful.

As security engineers, we have to pick battles and priorities just like other engineers. We have to find the improvements that make the most sense for their cost, and we have to work those through the various challenges.

One of the complexities of consulting is that it can be trickier to interrupt in a large meeting, and you have less ability to speak for an organization. I'd love your advice on what a consultant should do when they watch someone at a client site demonstrating skepticism. Should I have stepped in at the time? How? (I did talk with a more senior person on the team who is working the issue.)