Secure Boot and Secure by Design

The failure to secure boot keys should be a bigger deal.

In case you missed it, Ars Technica has a story, “Secure Boot is completely broken on 200+ models from 5 big device makers.” The key* point is that “Keys were labeled ‘DO NOT TRUST.’ Nearly 500 device models use them anyway.” At some level, I get it. There’s a lot of work to do in shipping a big complex system, even if that big complex system is in a small form factor. But.

* Sorry. (Not sorry.)

Should a company shipping a cryptographic product realize they need to do something about the keys? I have a hard time with an answer other than “yes.” What that work is depends on the system, but they seem to have failed to look at a fundamental component that’s a key part of the boot process. Never mind “look carefully.”
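To make “do something about the keys” concrete, one of the cheapest checks is a release gate that refuses to ship an image whose platform key is labeled as a test key. Below is a minimal Python sketch, assuming the certificate subject strings have already been extracted from each firmware image by some other tool. The function name, the marker list, and the inventory are hypothetical and purely illustrative, not real vendor data or any vendor's actual process.

```python
# Hypothetical release gate: flag firmware images whose Secure Boot platform
# key (PK) certificate subject carries a "do not trust" marker.
# Assumption: subject strings were already extracted from each image by some
# other tool; certificate parsing is out of scope for this sketch.

# Markers that identify test keys. Extend as needed (assumed list).
UNTRUSTED_MARKERS = ("DO NOT TRUST",)


def flag_untrusted_keys(subjects: dict[str, str]) -> list[str]:
    """Return, in sorted order, the models whose key subject contains a marker."""
    flagged = []
    for model, subject in sorted(subjects.items()):
        upper = subject.upper()  # case-insensitive comparison
        if any(marker in upper for marker in UNTRUSTED_MARKERS):
            flagged.append(model)
    return flagged


if __name__ == "__main__":
    # Made-up inventory for illustration only.
    inventory = {
        "model-a": "CN=DO NOT TRUST - Test PK",
        "model-b": "CN=Example Corp Platform Key 2024",
    }
    for model in flag_untrusted_keys(inventory):
        print(f"Refusing to ship {model}: key is marked as untrusted")
```

A substring check like this is crude, but that is the point: the keys in question announced themselves in plain text, so even a trivial automated gate in the build pipeline would have caught them.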

Two of the companies that failed have taken CISA’s Secure by Design Pledge. (It’s not hard to figure out which, but my goal here is not to call them out or shame them.) Should they be penalized? Removed?

On one hand, obviously not. The Pledge has a set of specific actions, and they have not clearly failed to take any of those or violated the spirit of the points in the pledge.

On the other hand, they are clearly failing at secure by design. Are they failing to a degree where their failure constitutes, say, a deceptive practice or casts a shadow on the pledge? I plan to write a separate post about the market issues.

But we can use this to evaluate the Pledge, and I think it could be enhanced in three ways:

- First, the Pledge should require SBOM delivery. While secure boot has hardware components, an SBOM would at least improve awareness of what the manufacturer is shipping. (They presumably have an actual bill of materials, but it didn’t extend to software.)

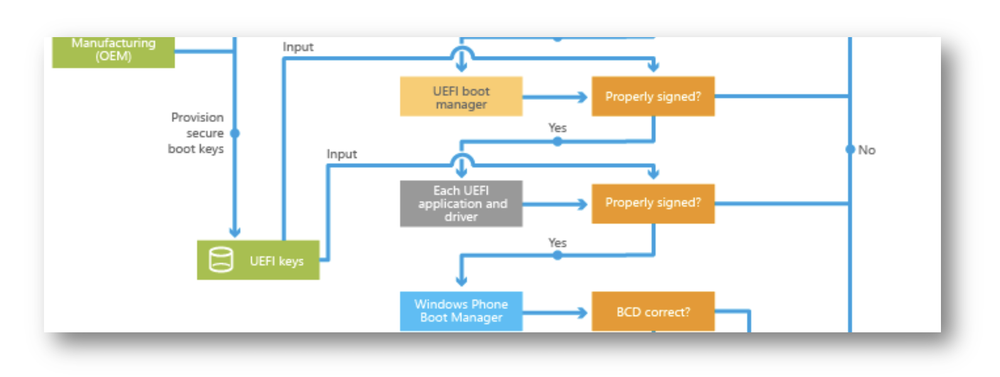

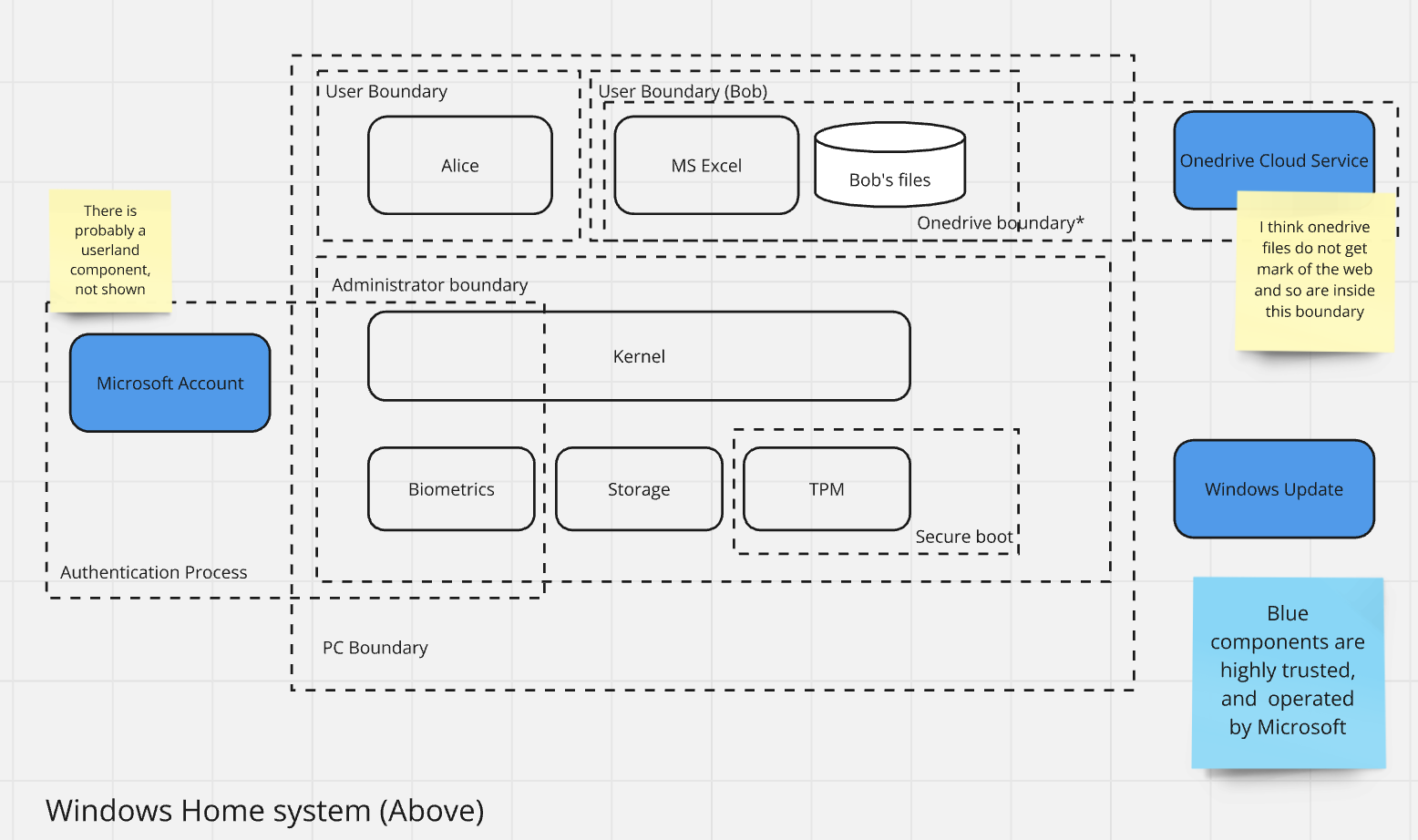

- Second, the Pledge should require that the manufacturer create, maintain, and share a high-level threat model showing product trust boundaries and external dependencies. For another conversation, I sketched the model below; it’s incomplete, but it shows how to present a threat model in a way that shouldn’t trigger any pearl-clutching about attacker roadmaps. The Microsoft model used as a header would work if they added trust boundaries; the full model is linked below.

- Third, it should require a commitment to appropriate care in the creation of products. There will doubtless be people who argue against this and ask what due care means. I mean it to be equivalent to the commitments you now need to make to sell to the Federal Government. (It is exceptionally tempting to add “or a superset,” but that opens the door to an argument against it, which isn’t worth having right now.)

Overall, I think that pledging to be Secure by Design is a public commitment that a company is making, and splitting hairs about the specifics they’ve pledged to is not a good look for anyone.

My sketch of a Windows threat model. This level of information expresses what the product is or does. (The link has a second example and a Miro board you can copy and build on.)

As an aside, Shostack + Associates has not taken the Pledge, and given a blog post like this, perhaps I should explain why. Simply put, the Pledge is for software makers, and we don’t make (much) software. 99% of our delivery is on the platforms of much larger producers. We’ve never shipped a patch, have no vulns to disclose or get CVEs for, and any evidence of intrusion is under the control of Zoom, Slack, and others, not us.

Image excerpted from Secure boot and device encryption overview. I like both the use of color to group the many “properly signed” boxes, and how they’re all on the right, leading to “boot failure.” (Not shown in my excerpt.)