GPT-3

The OpenAI chatbot is shockingly improved — its capabilities deserve attention.

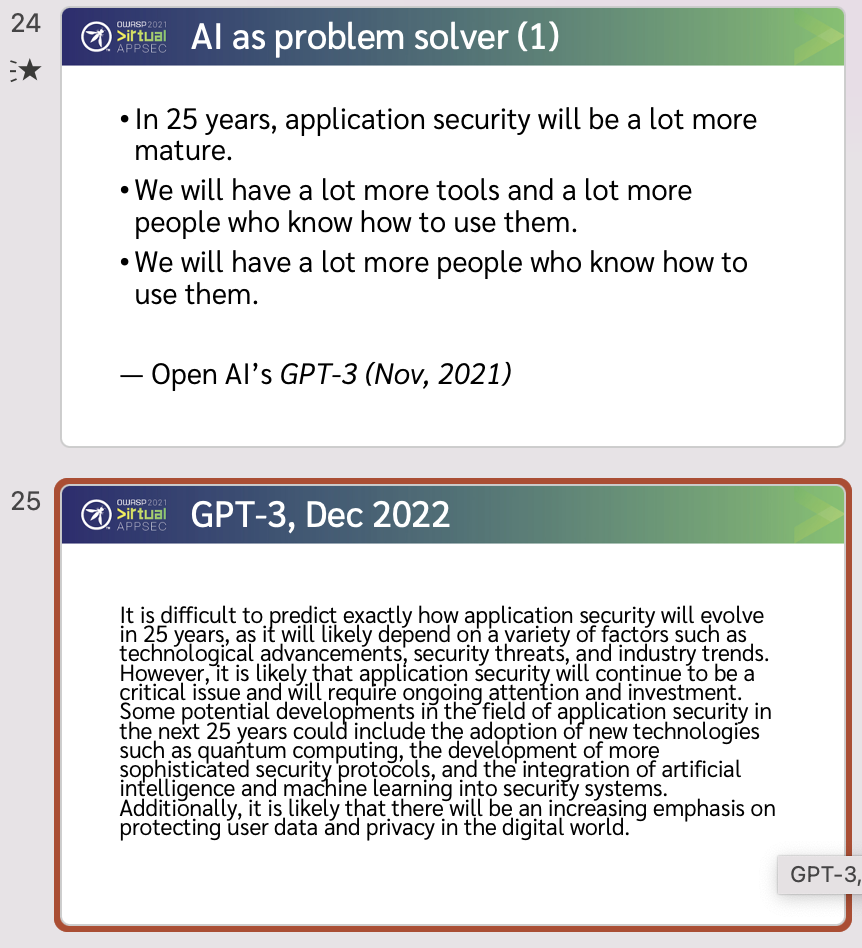

This week, it’s been hard to avoid text from OpenAI’s GPT-3 text generator, which has gotten transformationally better over the last year. Last year, as I prepared for my OWASP AppSec keynote (25 Years in AppSec: Looking Back, Looking Forward), I was given early access and gave it the prompt “In 25 years, application security will be...” After I filtered through some answers, it gave me some OK bullet points. This year, it gave me...something quite different, and I inserted the text into my slides:

The impact of freely available text that’s reasonably convincing is something that OpenAI (and others) have been thinking about, but it’s now viscerally here. A few interesting longreads I’ve come across are:

- On Bullshit, And AI-Generated Prose (Clive Thompson, Medium)

- OpenAI's new ChatGPT bot: 10 dangerous things it's capable of (Ax Sharma, Bleeping Computer)

- OpenAI’s New Chatbot Will Tell You How to Shoplift And Make Explosives (Janus Rose, Vice)

- Talking About Large Language Models (Murray Shanahan, arXiv) makes the point that Large Language Models (including GPT-3) are really answering the question “what's the most common next token after my prompt?” (Added Dec 11)

- ML and flooding the zone with crap (Greg Linden, Geeking With Greg) points out that the majority is not always right, especially when there’s an incentive to manipulate appearances. (Added Dec 11)

- Machine Generated Text: A Comprehensive Survey of Threat Models and Detection Methods (Evan Crothers, Nathalie Japkowicz, Herna Viktor, arXiv) is an extensive survey. (Added Dec 11)

- A New Chat Bot Is a ‘Code Red’ for Google’s Search Business (Nico Grant, Cade Metz, New York Times) (Added Dec 22)

- 11 Problems ChatGPT Can Solve For Reverse Engineers and Malware Analysts (Aleksandar Milenkoski & Phil Stokes, SentinelOne). Note, though, that Jordan points out that “the diagram is very wrong...locals are too high (they go below the saved return address), saved registers are too low (they go above arguments), and who knows what's up with the padding bit it doesn't make sense at that location.”

- An A.I. Pioneer on What We Should Really Fear (David Marchese, New York Times) (Added Dec 28)

Also, not a longread, but attributed to Andrew Feeney: