Threat Model Thursday: 5G Infrastructure

The US Government's lead cybersecurity agencies (CISA, NSA, and ODNI) have released an interesting report, Potential Threat Vectors To 5G Infrastructure (press release), and I wanted to use it for a Threat Model Thursday, where we take a respectful look at threat modeling work products to see what we can learn.

The first thing I look for is a statement about who did the work and why. I think the report is from a Threat Model Working Panel of the Enduring Security Framework (ESF) of CIPAC, but then there's language like "identified by the ESF and 5G TM working panel... (emphasis added)", which implies the panel is separate. That's not a big deal, and in light of some of the ungrounded claims that 5G causes cancer, and the propensity of some people to jump to death threats, I'm more accepting than normal of the report not having names on it.

The report lists a set of vectors (where the threats apply) and then threat scenarios. It has three main groups of vectors (things which are vulnerable to threats): policy and standards, supply chain, and systems architecture. I think vectors are being used to address the question of where things can go wrong, and the vectors relate to scoping in an interesting way that's worth grappling with. (Nancy Leveson's work on control functions for development and operations seems related.) Grouping by vectors and scenarios is an interesting overall approach, and it makes for a manageably sized report, which is exceptionally useful given the complexity of 5G.

This set of threat vectors overlaps with both "what are we working on?" and "what can go wrong?", and I think explicitly talking about those questions leads to useful results, which I'll discuss question by question.

What are we working on?

We should know what's in scope. Is it the networks? The radio networks, or something broader? Page 2 states "Additionally, 5G networks will use more ICT components than previous generations of wireless networks...", which sounds like the scope of the systems goes deeper, and the glossary mentions core networks. Are end user devices in scope? Anything with a 5G chip? The accounting systems? The data gathered by 5G? "Telesurgical devices" are explicitly mentioned; are they in scope? (Why anyone would run a telesurgical device over a long-range radio, rather than switching to more reliable fiber as soon as economically feasible, is beyond me. There are probably scenarios where it's helpful to have a device not tethered to fiber, but is the entire device in scope for this work, or just the 5G modem? Or neither, because end devices are not trusted?)

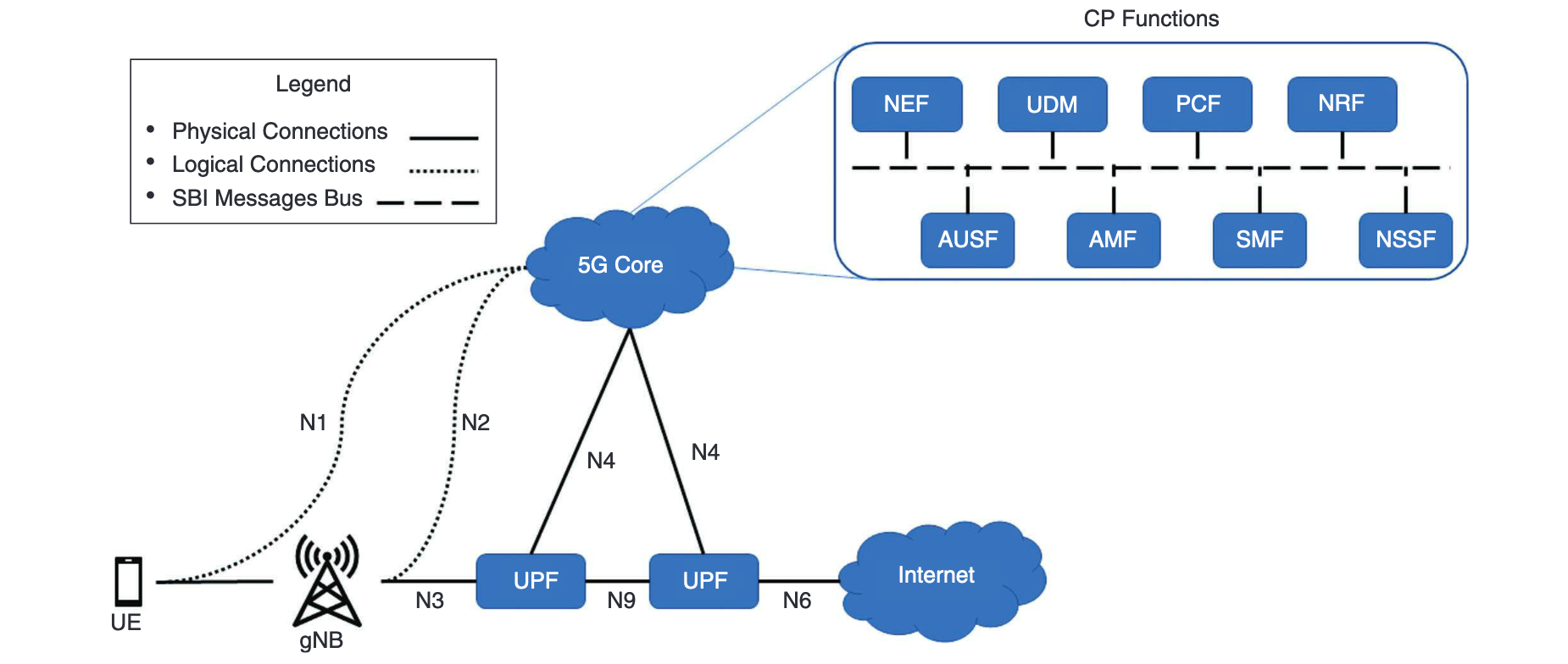

A diagram showing that scope would help. I'm borrowing one from An Attack Vector Taxonomy for Mobile Telephony Security Vulnerabilities for this post.

The diagram and discussion should be explicit about trust boundaries. What trusts what? For example, what's the trust relationship between User Equipment and other things? Missing boundaries may be a flaw in the design of 5G, rather than a flaw in the analysis. However, that should be called out by the people doing the analysis, rather than the person writing this meta-analysis.

An explicit statement of the scope of the work, and the trust boundaries that are supposed to delineate participants would be a great help. To be fair to the panel, cellular network systems are complex, and are made more complex because the people building them have these, ummm, unique perspectives, with terminology like "non-standalone network" and a "core network" which isn't the internet. Their baroque language and expansive documentation are both barriers. Nevertheless, knowing what's in what we used to call the "trusted compute base" is essential. We'll return to the concept of the TCB.
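To make "what trusts what?" concrete, here is a minimal Python sketch of the kind of explicit model I'm asking for: each element is assigned to a trust zone, and any data flow whose endpoints sit in different zones is flagged as a boundary crossing that deserves analysis. The element names, the zone assignments, and the `boundary_crossings` helper are hypothetical illustrations loosely drawn from the borrowed diagram, not anything the report defines. Deciding where those zones really lie is exactly the work the report leaves implicit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Element:
    name: str
    zone: str  # hypothetical trust zone this element is assumed to live in


@dataclass(frozen=True)
class Flow:
    source: Element
    dest: Element
    label: str


def boundary_crossings(flows):
    """Return the flows whose endpoints sit in different trust zones."""
    return [f for f in flows if f.source.zone != f.dest.zone]


# Hypothetical elements and zones; real 5G scoping would need many more.
ue = Element("User Equipment", "subscriber")
ran = Element("Radio Access Network", "operator-edge")
core = Element("Core Network", "operator-core")
billing = Element("Accounting system", "operator-core")

flows = [
    Flow(ue, ran, "radio link"),
    Flow(ran, core, "backhaul"),
    Flow(core, billing, "usage records"),
]

for f in boundary_crossings(flows):
    print(f"{f.source.name} -> {f.dest.name} ({f.label}) crosses "
          f"{f.source.zone} -> {f.dest.zone}")
```

Writing the zones down, even this crudely, forces the question of whether the radio access network trusts the user equipment, and whether the core network trusts either of them.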

In fact, there's a lesson from Sir Tony Hoare: "there are two ways of constructing a software design. One way is to make it so simple that there are obviously no deficiencies and the other way is to make it so complicated that there are no obvious deficiencies." The 5G folks are clearly in the complexity camp, and that complexity dogs this analysis. Again, I want to be clear that I mean no disrespect to the folks doing the analysis work. Everyone would benefit from more layering and segmentation in cellular network designs, and from treating systems like telesurgery or video streaming as services which run on top of the network layers. You know, the way the internet's layered architecture has enabled video streaming to be added without any changes to the underlying layers. We'll come back to why that is so important when we get to what's being done about the problems.

What can go wrong?

The nature of the threats listed is unusual and worth some attention. They're grouped into scenarios, starting on page 5 with "nation state influence on 5G standards."

Let's return to that telesurgical device, mentioned earlier. Again, the idea that a surgical device will have 5G integrated into it, rather than modularized, is accepted as if that's a reasonable future. The complexity, and the associated lack of layering, is a bug, not a feature. The device should distrust the network, encrypt its data in ways that are opaque to the network, and apply trust from one endpoint to the other, rather than having the data decrypted at various points within the system. Unfortunately, the cellular network folks hate that because they are stuck on the idea of smart networks and dumb end devices. (This is why hotspotting is broken on my phone: the cell company gets to send a "carrier settings" file and Apple trusts it, without ever letting me inspect or fix it.)
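Here's a minimal sketch of what "apply trust from one endpoint to the other" can mean, assuming the surgical device and its controller share a key that the network never sees. It uses HMAC-SHA256 from Python's standard library and covers only integrity and authenticity: any hop in between can carry the message but cannot change it undetectably. The key, the `seal`/`open_sealed` helpers, and the `untrusted_network` stand-in are hypothetical. A real design would also encrypt, for example with an AEAD cipher such as AES-GCM, and would need replay protection and proper key management, none of which is shown here.

```python
import hashlib
import hmac
import secrets

TAG_LEN = 32  # bytes in an HMAC-SHA256 tag

# Hypothetical key shared only by the two endpoints; the network never holds it.
DEVICE_KEY = secrets.token_bytes(32)


def seal(key: bytes, payload: bytes) -> bytes:
    """Sending endpoint: append an HMAC-SHA256 tag over the payload."""
    tag = hmac.new(key, payload, hashlib.sha256).digest()
    return payload + tag


def open_sealed(key: bytes, message: bytes) -> bytes:
    """Receiving endpoint: verify the tag, raising if anything was changed."""
    payload, tag = message[:-TAG_LEN], message[-TAG_LEN:]
    expected = hmac.new(key, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("message altered in transit")
    return payload


def untrusted_network(message: bytes, tamper: bool = False) -> bytes:
    """Stand-in for every hop between the endpoints; may flip a bit."""
    if tamper:
        return bytes([message[0] ^ 1]) + message[1:]
    return message


cmd = b"move arm 2mm"
print(open_sealed(DEVICE_KEY, untrusted_network(seal(DEVICE_KEY, cmd))))
try:
    open_sealed(DEVICE_KEY, untrusted_network(seal(DEVICE_KEY, cmd), tamper=True))
except ValueError as err:
    print(err)
```

The point of the design is where verification happens: only the endpoints hold the key, so nothing in the middle needs to be trusted for the check to hold.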

What are we going to do about it?

The report contains a good discussion of the dangers of the "optional controls" in the design, and I want to acknowledge that, and then go further.

Complexity not only threatens understanding but also limits the ability of system operators or users to deploy additional controls. In fact, the idea of mandatory and optional controls implicitly assumes that all the threats are known, and that appropriate controls can be specified in advance. We can, and should, argue about how quickly threats evolve, and how much variation in operation is helpful. But we also know that there is distrust between the suppliers, operators and regulators, and it seems reasonable to think that we might want to layer additional controls, especially on the "ICT" componentry, the OT componentry, and the system integrity.

It used to be that some folks trusted manufacturers to prescribe the appropriate systems security, while others relied on hardening guides. What I read from this report is that independent hardening guides are not going to exist for these critical infrastructure components.

Did we do a good job?

There's an interesting sentence in the intro: "This product is [...] derived from the considerable amount of analysis that already exists on this topic, to include public and private research and analysis." Someone with time on their hands could find out what's new here, and get an understanding of what that private analysis focused on, somewhat like how analysis of the old Data Encryption Standard (eventually) led to an understanding of new forms of cryptanalysis that were not previously public.

There's a lot going on under the covers here about the role of China and Chinese companies in developing the standards, building, and in many cases, operating the equipment that makes up 5G. Frankly, there seems to be an element of surprise about what it's like when your technology is made in a country you don't trust – a situation that's been present for non-US companies for decades. The state of the art in systems security is that supply chains are trusted, in the sense that they can betray you. Chip makers and software makers can insert extra functionality in ways that are exceptionally hard to detect, and if detected, hard to distinguish from mistakes.

For many years, the strategy has been to address these risks via trusted manufacturers using only cleared staff, but that approach has never been economical. This is why a small TCB is useful; software bills of materials (SBOMs) and Manufacturer Usage Descriptions (MUDs) will be as well. They are not perfect, but they will allow more focused analysis, easier detection of abnormal behavior, and better audits of what's actively deployed.
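As a rough illustration of how declared behavior makes abnormal behavior easier to spot, here is a Python sketch that compares observed network flows against a manufacturer's declared allowlist, and deployed components against a declared bill of materials. The data structures are deliberately simplified and hypothetical: a real MUD file (RFC 8520) is JSON built on YANG access-control models, and real SBOMs use formats such as SPDX or CycloneDX. The hosts, versions, and helper names are invented for illustration.

```python
from typing import NamedTuple


class FlowKey(NamedTuple):
    host: str
    port: int
    protocol: str


# Hypothetical, simplified declarations standing in for a MUD file and an SBOM.
DECLARED_FLOWS = {
    FlowKey("update.vendor.example", 443, "tcp"),
    FlowKey("ntp.operator.example", 123, "udp"),
}
DECLARED_COMPONENTS = {("openssl", "3.0.13"), ("baseband-fw", "2.4.1")}


def unexpected_flows(declared, observed):
    """Flows seen on the wire that the manufacturer never declared."""
    return sorted(set(observed) - declared)


def undeclared_components(declared, deployed):
    """(component, version) pairs running in the field but absent from the SBOM."""
    return sorted(set(deployed) - declared)


# Hypothetical observations from monitoring and from an inventory audit.
observed = [
    FlowKey("update.vendor.example", 443, "tcp"),
    FlowKey("203.0.113.7", 8443, "tcp"),
]
deployed = [("openssl", "3.0.13"), ("baseband-fw", "2.4.2")]

print(unexpected_flows(DECLARED_FLOWS, observed))
print(undeclared_components(DECLARED_COMPONENTS, deployed))
```

The check itself is a trivial set difference; the value comes from having a declaration to diff against at all.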

To sum up, looking at this report provokes an awful lot of thinking about what a threat model could or should be. (Sorry! Is it a comfort to know I cut half the words in editing?) My conviction that explicit system modeling and scoping are part of threat modeling, rather than an input to it, is strengthened by looking at "what are we working on?"