Information Security as an Evolutionary Arms Race – Research Collaborators Wanted

I’m starting an academically oriented research project and I’m looking for collaborators, contributors, reviewers, etc.

The topic is the arms race between attackers and defenders from the perspective of innovation rates and “evolutionary success”: the Red Queen problem (running just to stand still). Here’s a sample research question: “Can bureaucracies (defenders) keep up with a decentralized black market (attackers)?” Answering questions like this would have policy implications for the effectiveness of regulation/mandates vs. incentive-based approaches, R&D policy, etc.

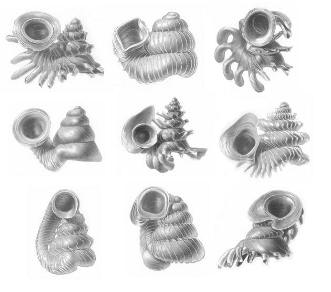

Sail shells from Borneo shaped by a Red Queen arms race with their main predator (a slug of the genus Atopos)

I want to focus primarily on theoretical models, but I’m also keen on grounding them in reality. If I can present some empirical data on the rate of innovation for various players as calibration, that would be superb.

On the theory side, I will be drawing from Evolutionary Ecology (host-parasite co-evolution, adaptive landscapes), Political Economy (models of *real* arms races), Computational Social Science (agent-based models, genetic algorithms, evolutionary game theory), and Economic-Engineering models of innovation and organization learning (risk/reward, optimal investment, etc.). I will also draw on “computable economics” that attempts to measure the information processing/learning capabilities of central planning vs. markets, etc.

Regarding empirical data, I would be interested in any of the following:

- Rate of innovation in the underlying information and IT environment
    - What’s the half-life of the IT architecture in a large organization?
    - What’s the product life for computing platforms?
    - What’s the innovation rate for new forms of information or information standards (e.g. XML)?

- Rate of innovation in attacker tools, methods, and capabilities
    - Timeline of major innovations (first appearance and widespread use)
    - Time between discovery of a vuln and widespread availability of an exploit
    - % of exploits that are zero-day vs. known/resolved vulns
    - Regime change in time series data that signals a major innovation (e.g. the phishing boom)
    - Appearance rate of new monetization schemes, etc.

- Rate of innovation in defender tools, methods, controls, and capabilities
    - Lifecycle of major technology solutions (products or products+services)
    - What’s the half-life of corporate security policies? How often do policy manuals or training need to be completely redone?
    - How long does it take to evaluate, test, and widely deploy some new capability? (e.g. web application security after 2000)

- Rate of innovation in regulations, standards (e.g. PCI-DSS), and other top-down mandates
    - How long does it take to design and publish?
    - How often are they updated and revised?
    - How much forward-looking investigation do they do to anticipate future information security environments or threats?

- Evolution processes in the “Black Hat” ecosystem

- Evolution processes in the information security technology and professional services ecosystem
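As one illustration of how a “half-life” question from the list above could be turned into a measurement, here is a minimal sketch. It assumes that lifetimes (of a policy, a platform, an architecture) follow a simple exponential decay and that every item in the sample has already been retired; both are strong assumptions, and data that includes still-active items would need proper survival analysis instead. The function name and the sample numbers are hypothetical, made up purely for illustration.

```python
import math


def exponential_half_life(lifetimes):
    """Estimate a half-life from observed lifetimes.

    Assumes exponential decay: the maximum-likelihood decay rate is
    1 / mean(lifetimes), and the half-life is ln(2) / rate.
    """
    if not lifetimes:
        raise ValueError("need at least one observed lifetime")
    if any(t <= 0 for t in lifetimes):
        raise ValueError("lifetimes must be positive")
    mean_lifetime = sum(lifetimes) / len(lifetimes)
    decay_rate = 1.0 / mean_lifetime
    return math.log(2) / decay_rate


# Hypothetical data: years each security policy manual lasted before
# it had to be completely redone. Not real measurements.
hypothetical_policy_lifetimes_years = [2.0, 3.5, 1.5, 6.0, 4.0]

half_life = exponential_half_life(hypothetical_policy_lifetimes_years)
print(f"Estimated half-life: {half_life:.2f} years")
```

Comparing half-lives estimated this way for attackers, defenders, and regulators would give one rough calibration of their relative innovation rates.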

Of course, this list is extremely broad. I’m all in favor of narrowing down to a particular security domain and ecosystem. Please make suggestions! Pointers to existing empirical reports are most welcome! Please email me privately (russell.thomas A-T meritology D-O-T com) if you are interested in collaborating or contributing in any way. Ideally, I’d like to have a paper ready to submit to WEIS, in Feb. Grad students welcome!

I’d argue that you’ve fallen into a bit of a false dichotomy at such an early stage of the project that you run the risk of missing the genuinely interesting stuff that’s going on in this space.

The model of “big for-profit company is the good guy protecting sensitive data” and “evil black hat ‘hackers’ are the bad guy trying to steal data” might have been useful in, say, 1985. However, to create that false simplicity in the online ecosystem, today, completely ignores the real topological patterns of security innovation, security threats, and forced evolutionary upgrades of mutualistic toolsets.

I’d suggest that starting with a network-centric (in the mathematical sense) model of online ecosystems is a more productive path for your stated security goals. Online, there are “predators” and “prey” in various niches and sub-niches within the larger network of data connectivity and sharing. Sometimes the “predators” are governments and the “prey” are citizens; sometimes it’s media cartels as predators, and distributed groups of like-minded activists being targeted as prey. Sometimes the tables turn, and Anonymous is stalking Scientology with security-busting tools. And then there’s Wikileaks… bit of a complex variable, there.

It is within this shifting, fluid, dynamic structure that the really accelerated and creative evolution of security exploits and defenses is actually happening. By the time the new stuff gets dumbed-down to the point where some overgrown helpdesk monkey in a bloated private corporation gets convinced by a slick salesman working for a proprietary software company “selling” limited access to non-source binaries, the actual cutting edge that developed the core innovation in that repackaged tool has long since moved on to newer, better, faster, smarter stuff.

The Platonic example of this is so-called “fast flux DNS” tools. The trajectory of development, through deployment, through expansion, and eventual pick-up by commercial entities is more or less textbook in how it’s played out. However, if you just look for “good guy big companies” and “bad guy scary hackers,” you’ll miss not only the forest for the trees, but also the trees themselves and maybe even the idea of plants in general.

Fausty

Chief Technology Officer

Baneki Privacy Computing

http://www.baneki.com

http://www.cryptocloud.net

http://www.cultureghost.org

Great comments, Fausty.

Yes, the way I worded the description does fall into the “good guys vs. bad guys” mental trap. I hate that, myself, when other folks in the InfoSec community do that. And now I’ve done it myself. D’oh!

But we do have to start somewhere, and starting with a simple set of models and scenarios makes it more tractable and easier to digest. Starting with simple host-parasite and predator-prey models, and then expanding from there, seems like a productive research path. (I have to fight my own urges to add complexity!)
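To make that starting point concrete, here is a minimal sketch of the classic Lotka-Volterra predator-prey model, integrated with a fourth-order Runge-Kutta step. Reading “prey” as exposed targets and “predators” as attackers is only an illustrative mapping, and the parameter values and function names are assumptions chosen for the example, not calibrated estimates.

```python
import math


def lotka_volterra(x, y, a, b, c, d):
    """Return (dx/dt, dy/dt) for prey x and predators y.

    a: prey growth rate, b: predation rate,
    c: predator death rate, d: predator growth per prey consumed.
    """
    return a * x - b * x * y, -c * y + d * x * y


def rk4_step(x, y, dt, params):
    """Advance (x, y) by one fourth-order Runge-Kutta step of size dt."""
    k1x, k1y = lotka_volterra(x, y, *params)
    k2x, k2y = lotka_volterra(x + 0.5 * dt * k1x, y + 0.5 * dt * k1y, *params)
    k3x, k3y = lotka_volterra(x + 0.5 * dt * k2x, y + 0.5 * dt * k2y, *params)
    k4x, k4y = lotka_volterra(x + dt * k3x, y + dt * k3y, *params)
    x_next = x + dt / 6.0 * (k1x + 2 * k2x + 2 * k3x + k4x)
    y_next = y + dt / 6.0 * (k1y + 2 * k2y + 2 * k3y + k4y)
    return x_next, y_next


def simulate(x0, y0, params, dt=0.01, steps=5000):
    """Return the list of (prey, predator) states, including the start."""
    trajectory = [(x0, y0)]
    x, y = x0, y0
    for _ in range(steps):
        x, y = rk4_step(x, y, dt, params)
        trajectory.append((x, y))
    return trajectory


def invariant(x, y, a, b, c, d):
    """Quantity conserved by the exact Lotka-Volterra dynamics."""
    return d * x - c * math.log(x) + b * y - a * math.log(y)


# Illustrative parameters only (a, b, c, d); equilibrium at x = c/d, y = a/b.
PARAMS = (1.0, 0.5, 0.75, 0.25)
traj = simulate(2.0, 1.0, PARAMS)

prey = [x for x, _ in traj]
print(f"prey range: {min(prey):.2f} to {max(prey):.2f}")
```

In this simplest form neither side ever “wins”: the two populations cycle around an equilibrium indefinitely, which is the baseline that richer host-parasite or agent-based models would then extend.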

What would be fantastic would be to create a set of models that works for both good guy vs. bad guy scenarios and also for the more fluid/ambiguous/emergent network-centric scenarios.

When I was formulating this research project, I actually had the “network-centric” scenarios in mind, as you describe. I didn’t include them in the description, partly to keep it short. But it’s the network-centric scenarios that are a prime motivation for using methods and tools from computational social sciences rather than relying solely on dynamical system models drawn from standard evolutionary ecology (i.e. systems of ordinary or partial differential equations).

Taking on network-centric scenarios has the added benefit of making our models applicable to a wide range of applications outside of information security, strictly defined. That’s a good thing, because that means more sponsors and “powers that be” will be interested in the results.

I’m glad you brought up “fast flux DNS” as an example. I’ll dig into that more to see if it would make a good case study for this research.

Thanks again for your insights.

—————-

P.S. While we are discussing network-centric scenarios, I’m also keenly interested in modeling the dynamics of “grey hats”: all the poor users, managers, administrators, etc. who have to implement and use the security technologies and policies while trying to do their job or seeking personal pleasure. They are in their own “arms race” of sorts (or maybe “competitive race” might be a better fit) with fast changing information technologies (that they want or try to use), changing threats (that they may or may not understand, or may misperceive), and changing security policies. Regarding security, the utility function of the “grey hats” is very much dominated by avoiding and minimizing their security capabilities and costs, which can lead to rational or irrational non-compliance, over-compliance (“ban everything!”), and also to falling prey to social engineering (e.g. downloading fake AV). Thus their lethargy and under-compliance, on the one hand, or their zealotry and anxiety-driven over-reactions, on the other hand, can cause the “grey hats” to unwittingly aid the “black hats” (however they are defined).

As far as I know, no one in the Economics of Information Security community has modelled all these dynamics at the same time, even in the simple attacker-defender frame. Should be fun!