A Black Hat Sneak Preview (Part 2 of ?)

Following up on my previous post, here’s Part 2, “The Factors that Drive Probable Use”. This is the meat of our model. Follow-up posts will dig deeper into Parts 1 and 2. At Black Hat we’ll be applying this model to the vulnerabilities that are going to be released at the show.

But before we do our digging, two quick things about this model and post. First, the model is a “knowledge” model (that’s a term Alex just made up, btw, to describe the difference between Bayesian and Frequentist approaches to statistical analytics). It measures state of knowledge rather than state of nature. That means this is not a probabilistic model that tries to fill in a count for some population (which is how many people expect traditional statistical analysis to work); rather, this model measures a degree of belief. Second, the reason we did this was twofold: 1.) establishing accurate information about the rate of adoption for what we want to study is difficult, and 2.) if we get to the point where we *can* measure rate of adoption in the wild, there’s a proof that a strong knowledge model can never be worse than a frequentist model, and that sort of data will only increase the accuracy of the knowledge model’s results.

Finally, we’re using this model with “exploit code” in this post to describe how much we believe a specific exploit will be used by the aggregate threat community. If you recall the Gartner Hype Cycle graph, this model is an attempt to identify and “measure” the factors that drive that curve of “adoption”. It should be noted that this model might work well not just for describing a belief that exploit code will be well adopted, but also for describing a belief that a given weakness (what is commonly referred to as a vulnerability) will have exploit code developed for it. The authors intend to play with this model for both purposes.
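To make the “degree of belief” idea a little more concrete, here is a minimal sketch of how a belief could be refined as data arrives. It is an illustration only, not the model itself: the Beta prior, the `Belief` class, and all the numbers are assumptions invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Belief:
    """Beta(alpha, beta) degree of belief that an exploit gets adopted.

    Hypothetical helper for illustration; not part of the published model.
    """
    alpha: float
    beta: float

    def mean(self) -> float:
        # Expected value of a Beta(alpha, beta) distribution.
        return self.alpha / (self.alpha + self.beta)

    def update(self, adopted: int, not_adopted: int) -> "Belief":
        # Conjugate update: observed counts are simply added to the prior.
        return Belief(self.alpha + adopted, self.beta + not_adopted)


# Assumed prior: we start out undecided (centred on 0.5).
prior = Belief(alpha=2.0, beta=2.0)

# Assumed observations: 3 comparable exploits were adopted, 1 was not.
posterior = prior.update(adopted=3, not_adopted=1)

print(f"prior belief:     {prior.mean():.3f}")
print(f"posterior belief: {posterior.mean():.3f}")
```

The point of the sketch is only that a belief stated up front can absorb frequency data later, which is the property described above.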

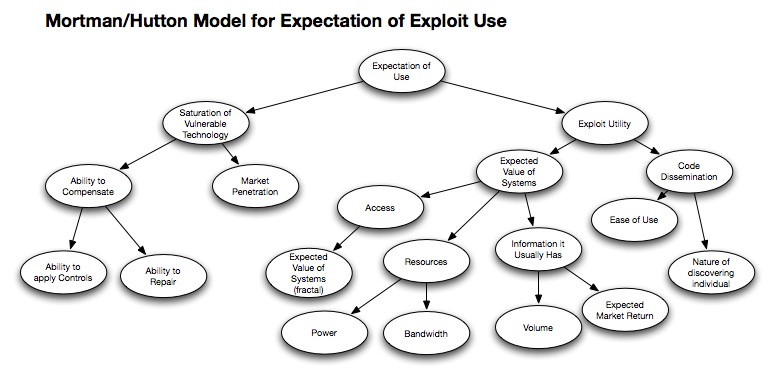

On To The Model: The Mortman/Hutton Model for Expectation of Exploit Use

So here it is:

In this model, essentially, two significant factors determine the fate of the exploit code. To oversimplify the real-world factors that drive exploit adoption, the exploit must be useful. And in order to be useful, the exploit needs valuable systems to penetrate and people to use it. We break these two aspects of usefulness down into two concepts: Saturation of Vulnerable Technology and Exploit Utility.

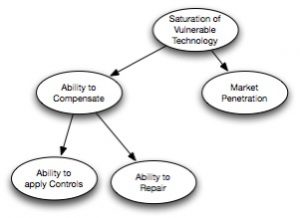

Saturation of Vulnerable Technology (SVT)

If an exploit is going to be developed or used, there actually need to be systems to attack. Saturation of Vulnerable Technology (SVT) reflects that requirement. SVT itself represents the aggregate number of vulnerable systems, and is driven by two main factors: how significant the market penetration is for the technology (Windows = a lot, BeOS = a little), and the ability to compensate for the vulnerability. This ability to compensate is broken down into two sub-elements: the ability to repair (patch the system, for example) and/or the ability to apply compensating controls.

The outcome in SVT is “measured” as the probable number of systems without the ability to compensate. It is a combination of the number of systems with the initial vulnerability and the probable % of that population that is currently vulnerable.
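As a rough sketch of that combination, SVT could be estimated like this. The function name, the parameters, and the assumption that repair and compensating controls are independent of each other are ours for illustration; the model does not prescribe a formula, and the numbers are made up.

```python
def saturation_of_vulnerable_technology(
    installed_base: int,
    p_repaired: float,
    p_compensated: float,
) -> float:
    """Probable number of systems still exposed to the exploit.

    installed_base: systems that shipped with the vulnerability
    p_repaired:     believed fraction that has been patched/repaired
    p_compensated:  believed fraction of the rest protected by
                    compensating controls
    """
    for p in (p_repaired, p_compensated):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must be between 0 and 1")
    # Assumption: repair and compensating controls act independently,
    # so the still-vulnerable fraction is the product of the two misses.
    fraction_vulnerable = (1.0 - p_repaired) * (1.0 - p_compensated)
    return installed_base * fraction_vulnerable


# Hypothetical inputs: 1,000,000 installs, half patched, half of the
# remainder shielded by compensating controls.
svt = saturation_of_vulnerable_technology(1_000_000, 0.5, 0.5)
print(f"probable vulnerable systems: {svt:,.0f}")
```

In practice each input would be a belief (a range or distribution) rather than a single point value, in keeping with the “knowledge” model described earlier.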

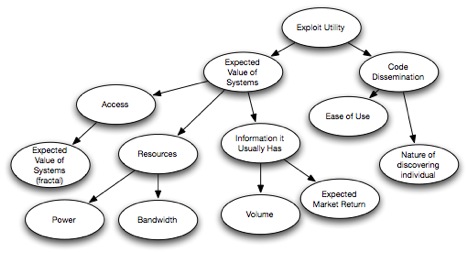

Exploit Utility

If SVT attempts to measure the aggregate attack surface available to the exploit code, then Exploit Utility is an attempt to measure how useful the exploit code is to the aggregate threat community. Exploit Utility is dependent on two factors: whether the systems that the exploit will be used on can be expected to return value to the threat community, and how pervasive the exploit code is in the threat community. We call these the Expected Value of Vulnerable Systems (EVoVS) and Probability of Code Dissemination, respectively.

The premise behind the Expected Value of Vulnerable Systems is simply an attempt to suggest that exploit code will only be used if the attacker can expect to gain something from the attack. This value can be expressed in the Information it Offers (in volume and expected market return), the Resources it Offers (in power/space/bandwidth), or the Access it Allows (notably, to other systems). The EVoVS can be measured as a range of value compared to the broader distribution of computing assets available as targets to the broader threat community.

The premise behind the Probability of Code Dissemination is simply that how much an exploit can be expected to be used (in aggregate) is a function of how “available” it is. This could simply be stated as the proportion of commonly available toolkit packages that include the exploit code. An expected Rate of Exploit Adoption could be said to be dependent on two factors: the ease of use (skills, resources required to use it) and the nature of the exploit author (secretive, non-secretive).
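Here is a minimal sketch of how the two halves of Exploit Utility might be put together. Everything in it is an assumption for illustration: the 0-to-1 scoring, taking the largest of the three value components, and multiplying value by dissemination are our choices, not something the model specifies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExpectedValue:
    """Believed value of the vulnerable systems, each scored 0..1
    relative to other targets (hypothetical scale)."""
    information: float  # Information it Offers
    resources: float    # Resources it Offers
    access: float       # Access it Allows

    def score(self) -> float:
        # Assumption: one strong source of value is enough to make a
        # target attractive, so take the largest component.
        return max(self.information, self.resources, self.access)


def dissemination(toolkits_with_exploit: int, toolkits_surveyed: int) -> float:
    """Proportion of surveyed toolkit packages that include the exploit."""
    if toolkits_surveyed <= 0:
        raise ValueError("need at least one surveyed toolkit")
    return toolkits_with_exploit / toolkits_surveyed


def exploit_utility(value: ExpectedValue, p_dissemination: float) -> float:
    # Assumption: utility scales with both value and availability.
    return value.score() * p_dissemination


# Hypothetical exploit: high-value data, included in 3 of 12 toolkits.
evovs = ExpectedValue(information=0.8, resources=0.2, access=0.5)
utility = exploit_utility(evovs, dissemination(3, 12))
print(f"exploit utility: {utility:.2f}")
```

A fuller version would also fold in ease of use and the nature of the exploit author when estimating dissemination.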

In Conclusion

So there it is, developed at several different Columbus-area coffee shops and over lots of chicken wings and beer after work. Now, many of us have been privy (or hostage) to discussions around the whats and whys of “some sort of vulnerability/exploit was just announced by research team/vendor”. If anything, hopefully this model will provide a rational basis for coming to a joint conclusion about why we should or shouldn’t care. This model is released under a Creative Commons Attribution-NonCommercial-ShareAlike license. That means you have to tell people where you found it, if you mess with it – please give back to the community, and please don’t go charging anyone for the use of it.

I’d be very careful with the “expected value of systems” part — that’s not distinguishing between opportunistic and targeted attacks. Not all attackers may actually know the value of what they’re attacking. You might want to play with the factors to include how much prior knowledge would affect the likelihood of using the exploit. (That’s one thing you have to compensate for when doing risk analysis: your internal knowledge bias can lead you to view targets differently from the way the threat community views them.)

Also, since the VZDBIR report pointed out that the initial “cracking” attack exploit wasn’t necessarily the same one that was used after gaining access to the system — you shouldn’t rank the utility of the exploit based on what you think the final goal will be (in the mind of the attacker). You touched on this with the “access” factor, but my gut feeling is that this should be played with some more. I’m not sure how much “resources” will affect the utility of any given exploit; that would remain constant for every system regardless of the attack being used.

But don’t let my caveats sully what is in truth a wonderful new model … this is a great contribution to risk analysis.

@shrdlu –

As far as your first point is concerned, we’re not looking for a tactical use against a particular asset; this isn’t a real threat/vuln pairing sort of model. It’s rather designed so that some new piece of exploit information can be evaluated for movement along that Hype-cycle/life-cycle graph. So that “value” statement is simply a sort of “Will there be value to an exploit for Oracle databases? Probably. Is there value to an exploit for Android-based phones? Not as much.” analysis.

For your second point, again, not tactical. But we needed some way to explain how an exploit against infrastructure hardware is an important consideration. So we might expect a new vulnerability or exploit against, say, Cisco routers to have some greater expectation of adoption in part because they provide access to other more valuable computing assets.

Alex, gotcha, makes sense. I guess it wasn’t clear to me how you were intending the model to be used.

Alex –

Due to heavy bias and experience, I am having a really difficult time getting my mind around this model.

I think it starts with the initial premise that the Gartner Hype Cycle represents anything other than a very general set of observations that are obvious to anyone who has been in the business for a while.

Perhaps we should correspond offline.

Patrick

@Patrick –

Discussing here is good, we may not be clear so thinking it out online might benefit others (see @shrdlu, above).

re: Gartner – this is why we *pre-supposed* the hype-cycle. I’m not sure it represents anything other than our general experience, either. Is this such a bad thing? Does that mean it’s invalid or not useful? I don’t think so. Like you say, it seems that it should be obvious, right? But if we can apply that “curve” to vulnerability discovery and exploit use, then it might make sense to figure out what factors drive that curve. This model is a fun attempt to do so.

@alexhutton & @mortman,

First of all, congrats on the collaboration and getting an opportunity to present at BlackHat. I do not think you have released enough information that I can come to a conclusion on the validity of this model.

This seems to be a hybrid model of CVSS and FAIR taxonomy elements. I am not attending BlackHat, but I know that once I can see this applied in a real world example – it will make more sense.

I am intrigued by the SVT branch of the tree. Specifically, “the ability to compensate” element. Is this in the context of the environment of the company or individual performing the analysis, or the larger population?

Regarding Expected Value of Systems. It seems like “Information It Usually Has” should be a branch off of “Access” – but will defer conclusions until better understanding the model.

Looks like you guys are off to a great start!

@Chris

LOL, I could see how you would call this a combination of CVSS and FAIR like stuff. But honestly, it’s not like we had those documents out at the restaurant when we did this on the back of a napkin.

RE: SVT – this is all about the larger population. We’re making generalizations here.

RE: IiUH – the thought process there was/is that for an exploit or vulnerability to gain “popularity” (i.e. be driven up along the Gartner adoption curve) two things were necessary, the first being that the systems it leads to privileges on would need to represent some value to an attacker. We tried to express this value in three ways: it can have information of value, it can have computing power of value, or it can lead to access to other systems that have one (or both, I suppose) of those elements.

There is one more post coming up. And that post will describe what we intend to do about maintenance for this model going forward. As you know, most models are living things. They represent theories that describe how we think the world works, and this one is no different. In fact, I’m not comfortable with the “information it usually has” branch (there’s no good accounting for braggadocio sort of attacks here) and there’s something not quite right about the code dissemination branch to me. But we all have full-time jobs, right?

@alexhutton – To be fair (no pun intended) to the rest of the readers, my comment re: FAIR and CVSS was more in the context of the taxonomy elements – how they are named and organized. The intent and focus of the model being proposed is much different than the intent of FAIR and CVSS. I should have provided more context. I look forward to reading more information – bring it on.