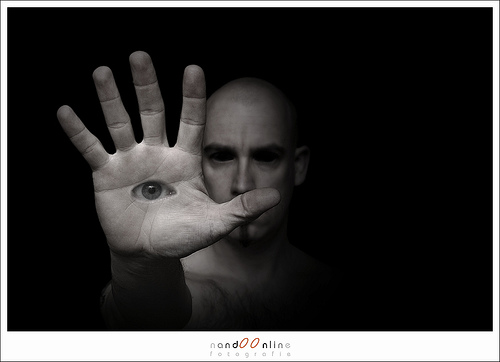

In the land of the blind...

Jeff Lowder takes PCI to the New School in “PCI DSS Position on Patching May Be Unjustified:”

> Verizon Business recently posted an excellent article on their blog about security patching. As someone who just read The New School of Information Security (an important book that all information security professionals should read), I thought it was refreshing to see someone take an evidence-based approach to information security controls.

First, thanks Jeff! Second, I was excited by the Verizon report precisely because of what’s now starting to happen. I wrote “Verizon has just catapulted themselves into position as a player who can shape security. That’s because of their willingness to provide data.” Jeff is now using that data to test the PCI standard, and finds that some of its best practices don’t make as much sense as the authors of PCI-DSS might have thought.

That’s the good. Verizon gets credibility because Jeff relies on their numbers to make a point. And in this case, I think that Jeff is spot on.

I did want to address something else relating to patching in the Verizon report. Russ Cooper wrote in “Patching Conundrum” [link to http://securityblog.verizonbusiness.com/2008/06/13/patching-conundrum/ no longer works] on the Verizon Security Blog:

> To summarize the findings in our “Control Effectiveness Study”, companies who did a great job of patching (or AV updates) did not have statistically significant less hacking or malicious code experience than companies who said they did an average job of patching or AV updates.

The trouble with this is that the assessment of patching is done by

> …[interviewing] the key person responsible for internal security (CSO) in just over 300 companies for which we had already established a multi-year data breach and malcode history. We asked the CSO to rate how well each of dozens of countermeasures were actually deployed in his or her enterprise on a 0 to 5 scale. A score of “zero” meant that the countermeasure was not in use. A score of “5” meant that the countermeasure was deployed and managed “the best that the CSO could imagine it being deployed in any similar company in the world.” A score of “3” represented what the CSO considered an average deployment of that particular countermeasure.

So let’s take two CSOs, analytical Alice and boastful Bob. Analytical Alice thinks that her patching program is pretty good. Her organization has strong inventory management, good change control, and rolls out patches well. She listens carefully, and most of her counterparts say similar things. So she gives herself a “3.” Boastful Bob, meanwhile, has exactly the same program in place, but thinks a lot about how hard he’s worked to get those things in place. He can’t imagine anyone having a better process ‘in the real world,’ and so gives himself a 5.

[Update 2: I want to clarify that I didn’t mean that Alice and Bob were unaware of their own state, but that they lack data about the state of many other organizations. Without that data, it’s hard for them to place themselves comparatively.]

This phenomenon doesn’t just impact CSOs. There’s fairly famous research entitled “Unskilled and Unaware of it,” [link to http://www.apa.org/journals/features/psp7761121.pdf no longer works] or “Why the Unskilled Are Unaware:”

> Five studies demonstrated that poor performers lack insight into their shortcomings even in real world settings and when given incentives to be accurate. An additional meta-analysis showed that it was lack of insight into their errors (and not mistaken assessments of their peers) that led to overly optimistic social comparison estimates among poor performers.

Now, the Verizon study could have overcome this by carefully defining what each score on the 0-to-5 scale meant for patching. Did it? We don’t actually know. To be perfectly fair, there’s not enough information in the report to make a call on that. I hope that they’ll make that clearer in the future.

Candidly, though, I don’t want to get wrapped around the axle on this question. The Verizon study (as Jeff Lowder points out) gives us enough data to take on questions which have been opaque. That’s a huge step forward, and in the land of the blind, it’s impressive what a one-eyed man can accomplish. I’m hopeful that as they’ve opened up, we’ll have more and more data, and more critiques of that data. It’s how science advances, and despite some misgivings about the report, I’m really excited by what it allows us to see.

Photo: “In the land of the blind, the one eyed are king” by nandOOnline, and thanks to Arthur for finding it.

[Updated: cleaned up the transition between the halves of the post.]

It sounds like one could just as well argue that CSOs who are happy with their patching programs don’t have a better security outcome than CSOs who feel their programs could be improved. The lesson has nothing to do with patching and everything to do with CSO attitudes, which is what Verizon measured.

Yes, very annoying that the “data” is really just a measure of self-perception.

I have run numerous engagements where the perception of executives is so far removed from the reality of their security that the PCI DSS is a welcome breath of fresh air to the conversation.

Patching not only makes a lot of sense, but the PCI incident response/investigation teams obviously ferret out the root cause (pun not intended) on their own. When breaches are no longer due to missing patches, they will surely update the PCI DSS appropriately.

I was under the impression that there were two separate sets of data at play here. One was the 1 through 5 rating, but Jeff’s point was in regards to the length of time that a patch was available prior to exploit for the incidents in question, wasn’t it?

Adam,

First, thanks for the kind words about our study. It is definitely our intention to make more data available, and on a more regular basis.

With regard to our measuring CSO responses, I think CSOs are being done a disservice by characterizing them as not knowing their patching solutions in adequate detail. In our experience, they are not so far removed from the front lines that they are as subjective as your post suggests. Further, our contact wasn’t always, or only, the CSO. We asked to speak to the “key person responsible”, and in most cases we felt we did.

As for defining 1 – 5 differently, we don’t think it would have necessarily been any better. We have consistently found that key responsible people often think they’ve achieved 100% deployment when, in fact, they haven’t. Consider both the “unknown unknowns” and “Error” sections of the study as explanations of why they think they’ve achieved 100%. Knowing whether you’re “truly” a 1 or a 5 will always be subjective, even if it’s based on reports your patching solution spit out for you. You can’t blame a patching solution for telling you 100% deployed if it is unaware of the 6 computers over there that no one knew existed.

Further, consider that we have three separate studies coming to the same conclusion. The “Countermeasures Effectiveness Study” you referenced is but one. In each of the three we found similar results despite asking the question in three different ways (via the different surveys or the investigators’ analysis). The “Sasser Study,” also referenced in my article, asked what percentage of systems were patched before the outbreak. The “Data Breach Investigations Report” used forensics for its determination.

As for more information about the “Countermeasures Effectiveness Study” itself, I’d invite you to examine it in more detail via the IEEE Security & Privacy published article “Is Information Security Under Control?” This article can be found in the January/February 2007 edition, written by Wade Baker and Linda Wallace. Wade was also a co-author of the Data Breach Investigations Report, and is a member of the Verizon Business RISK Team. The survey methodology is more fully explained there.

Our goal, however, wasn’t to be the most precise in terms of comparing reality to what a CSO thinks, but to demonstrate that the perceived effectiveness of patching may be misplaced, or not even clearly understood. If you think that AV alone is going to solve your malware problem, then you’ve misplaced your trust. If you think that a firewall is all that you need to prevent intrusions, think again. If you think that purchasing a “patching solution” is better than implementing relatively simple controls (that don’t require a new product), you’re overlooking a great chance to reduce risk and save money.

Cheers,

Russ Cooper

Hi Russ,

Thanks for your comments, and I’ll follow up more after I’ve looked at the additional data. I did want to clarify that I’m not claiming the CSOs were unaware of their own state, but of the state of others. I’ve added update 2 to explain that.