The Security Principles of Saltzer and Schroeder

Introduction and Context

In the nearly twenty years since I wrote this, I’ve taught thousands of developers about threat modeling and security design, and my views on design principles have evolved. By 2023, I’ve learned that design principles are harder to learn and apply than threat modeling techniques. It’s not that the principles are bad (I think some have stood the test of time), but I’ve seen that applying principles in design analysis requires careful analysis, abstraction, and the ability to conceive and compare a set of designs. It’s a more nuanced skillset, and the results are harder to assess.

If you ask two experts to apply the principles, you may get very different results. For example, is “Run as a normal user, not Administrator” a good application of Least Privilege? Does it require running as a sandboxed “Modern” app? More segmentation? The choice about how far to take a principle makes it both harder to teach and harder to apply.

- If you want to learn more about threat modeling or our training offerings, there are links above, or

- you can read on to learn about Saltzer and Schroeder’s classic security principles, as illustrated by Star Wars.

The Security Principles of Saltzer and Schroeder, as illustrated with Star Wars

Let me start by explaining who Saltzer and Schroeder are, and why I keep referring to them. Back when I was a baby, Jerome Saltzer and Michael Schroeder wrote a paper, The Protection of Information in Computer Systems. That paper has been referred to as one of the most cited, least read works in computer security history. And look! I’m citing it, never having read it.

If you want to read it, the PDF version (484k) may be a good choice for printing. The bit that everyone knows about is the eight principles of design that they put forth. And it is these that I'll illustrate using Star Wars. Because let's face it, illustrating statements like “This kind of arrangement is accomplished by providing, at the higher level, a list-oriented guard whose only purpose is to hand out temporary tickets which the lower level (ticket-oriented) guards will honor” using Star Wars is a tricky proposition. (I'd use the escape from the Millennium Falcon with Storm Trooper uniforms as tickets as a starting point, but it’s a bit of a stretch.)

These sections below originally appeared as blog posts over two months from October through December of 2005. They are presented in essentially their original form with minor edits for readability.

Economy Of Mechanism

Keep the design as simple and small as possible. This well-known principle applies to any aspect of a system, but it deserves emphasis for protection mechanisms for this reason: design and implementation errors that result in unwanted access paths will not be noticed during normal use (since normal use usually does not include attempts to exercise improper access paths). As a result, techniques such as line-by-line inspection of software and physical examination of hardware that implements protection mechanisms are necessary. For such techniques to be successful, a small and simple design is essential.

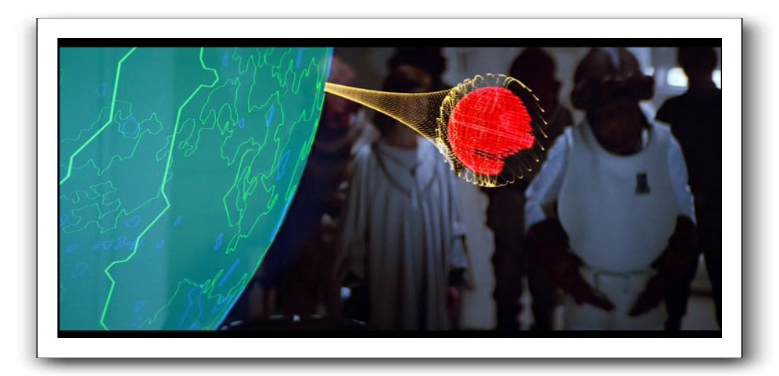

And so let's look at the energy shield which protects the new Death Star. It is, as Admiral Ackbar tells us, projected from a base on the nearby forest moon of Endor. And as you may recall, there were not only extra access paths which required reinforcement, but additional threats which hadn't been considered.

Firstly, why is it on the forest moon at all? Presuming that energy shields follow some sort of power-absorption law, the closer the shield is, the less power it will draw. But more importantly, being on the moon means that it is surrounded by forest, rather than cold, hard vacuum. The shield generator becomes harder to protect, meaning that additional protection mechanisms, each of which can fail, are needed.

Presumably, the Empire has power generation technology which drives the Death Star, and also the Star Destroyers. There's no need to rely on a ground-based station. The ideal placement for the energy shield is inside the Death Star, and traveling with it.

But instead, there's this bizarre and baroque arrangement. It probably comes from a fight between the Generals and the Admirals. The Generals wanted a bit of the construction process, and this was the bureaucratic bone thrown to them.

Expensive it was. mmm?

(Original post with comments: “Star Wars: Economy Of Mechanism”)

Fail-safe Defaults

Next, we look at the principle of fail-safe defaults:

Fail-safe defaults: Base access decisions on permission rather than exclusion. This principle, suggested by E. Glaser in 1965, means that the default situation is lack of access, and the protection scheme identifies conditions under which access is permitted. The alternative, in which mechanisms attempt to identify conditions under which access should be refused, presents the wrong psychological base for secure system design. A conservative design must be based on arguments why objects should be accessible, rather than why they should not. In a large system some objects will be inadequately considered, so a default of lack of permission is safer. A design or implementation mistake in a mechanism that gives explicit permission tends to fail by refusing permission, a safe situation, since it will be quickly detected. On the other hand, a design or implementation mistake in a mechanism that explicitly excludes access tends to fail by allowing access, a failure which may go unnoticed in normal use. This principle applies both to the outward appearance of the protection mechanism and to its underlying implementation.

I should note in passing that a meticulous and careful blogger would have looked through the list and decided on eight illustrative episodes before announcing the project. Because, on reflection, Star Wars contains few scenes which do a good job of illustrating this principle. And so, after watching A New Hope again, I settled on:

OFFICER: Where are you taking this... thing?

LUKE: Prisoner transfer from Block one-one-three-eight.

OFFICER: I wasn't notified. I'll have to clear it.

HAN: Look out! He's loose!

LUKE: He's going to pull us all apart.

Now this officer knows nothing about a prisoner transfer from cell block 1138. (Incidentally, I'd like to know where the secret and not-yet-operational Death Star is getting enough prisoners that they need to be transferred around?) Rather than simply accepting the prisoner, he attempts to validate the action.

You might think that it's a cell block: how bad can it be to have the wrong Wookiee in jail? It's not like (after Episode 3) there's much of the WCLU left around to complain, and the Empire is ok with abducting and killing the Royal Princess Leia, so a prisoner in the wrong cell is probably not a big deal, right? Wrong.

As our diligent officer knows, “A conservative design must be based on arguments why objects should be accessible, rather than why they should not.” The good design of the cell block leads to a “fail[ure] by refusing permission, a safe situation, since it will be quickly detected.” Now, Luke and Han also know this, and the officer happens to die for his care. Nevertheless, the design principle serves its purpose: The unauthorized intrusion is detected, and even though Luke and Han are able to enter the cell bay, they can't get out, because other responses have kicked in.

Had the cell block operations manual been written differently, the ruse might have worked. Prisoner in manacles? Check! (Double-check: they don't quite fit.) Accompanied by Storm Troopers? Check! (Double-check: one's a bit short.) The process of validating that something is ok is more likely to fail safely, and that's a good thing. Thus the principle of fail-safe defaults.
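For readers who think better in code, here is a minimal Python sketch of the two operations manuals. Everything in it is an assumption made up for illustration: the block names, the two sets, and both function names. The point is only that a transfer nobody thought about is refused by the permission-based check and waved through by the exclusion-based one.

```python
# Hypothetical data: neither list comes from the film or the paper.
NOTIFIED_TRANSFERS = {"block-2187"}   # transfers the officer was told about
KNOWN_BAD_TRANSFERS = {"block-0000"}  # transfers someone thought to forbid


def allow_by_permission(block: str) -> bool:
    """Fail-safe default: anything not explicitly permitted is refused."""
    return block in NOTIFIED_TRANSFERS


def allow_by_exclusion(block: str) -> bool:
    """Exclusion-based check: anything not explicitly refused is allowed."""
    return block not in KNOWN_BAD_TRANSFERS


# A transfer that nobody considered when either list was written.
request = "block-1138"
print(allow_by_permission(request))  # False: fails closed, and gets noticed
print(allow_by_exclusion(request))   # True: fails open, possibly unnoticed
```

The refusal in the first check is the "I wasn't notified. I'll have to clear it." moment: inconvenient, but quickly detected.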

(Original post with comments: Friday Star Wars: Principle of Fail-safe Defaults)

Complete Mediation

Complete Mediation: Every access to every object must be checked for authority. This principle, when systematically applied, is the primary underpinning of the protection system. It forces a system-wide view of access control, which in addition to normal operation includes initialization, recovery, shutdown, and maintenance. It implies that a foolproof method of identifying the source of every request must be devised. It also requires that proposals to gain performance by remembering the result of an authority check be examined skeptically. If a change in authority occurs, such remembered results must be systematically updated.

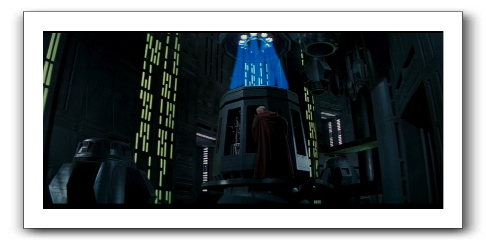

The key bit here is that every object is protected, not an amalgamation. So, for example, if you were to have a tractor beam controller pedestal in an out of the way air shaft, you might have a door in front of it, with some access control mechanisms. Maybe even a guard. I guess the guard budget got eaten up with the huge glowing blue lightning indicator. Maybe next time, they should have a status light on the bridge. But I digress.

The tractor beam controls were insufficiently mediated. There should have been guards, doors, and a response system. Such protections would have been a “primary underpinning of the protection system.”

But that was easy. Too easy, if you ask me. It stands in stark contrast to last week's post, “Friday Star Wars: Principle of Fail-safe Defaults,” whose weaknesses certain ungrateful readers (yes, you, Mr. assistant to... ) did have the temerity to point out. We have high standards here, and so we offer up a second example of insufficient mediation.

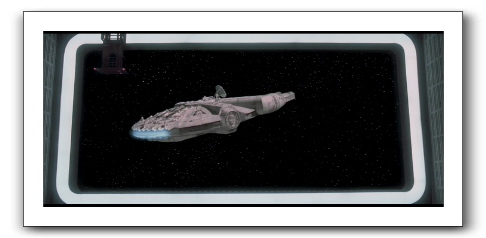

After they get back to the ship, there's nothing else to do. They simply fly away. The bay is open and the Falcon can fly out. Where's the access control? (This is another example of why firewalls need to be bi-directional.) Is it an automated safety that anything can just fly out of a docking bay? Seems a poor safety to me.

Once again, a poor security decision, the lack of complete mediation, aids those rebel scum in getting away. Now, maybe someone decided to let them go, but still, they should have had to press one more button.
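As a rough sketch of what "one more button" might look like in software, here is a small Python example. The `Guard` and `DockingBay` classes, the ship name, and the action names are all hypothetical. It tries to show two things from the quoted principle: departures are checked just like arrivals, and remembered authority checks are thrown away whenever authority changes.

```python
class Guard:
    """Hypothetical reference monitor: every request goes through check()."""

    def __init__(self):
        self._grants = set()  # (who, what) pairs currently authorized
        self._cache = {}      # remembered results of earlier checks

    def grant(self, who, what):
        self._grants.add((who, what))
        self._cache.clear()   # authority changed, so forget remembered results

    def revoke(self, who, what):
        self._grants.discard((who, what))
        self._cache.clear()

    def check(self, who, what):
        key = (who, what)
        if key not in self._cache:
            self._cache[key] = key in self._grants
        return self._cache[key]


class DockingBay:
    """Both directions are mediated: arriving and departing."""

    def __init__(self, guard):
        self._guard = guard

    def arrive(self, ship):
        if not self._guard.check(ship, "arrive"):
            raise PermissionError(f"{ship} may not arrive")
        return f"{ship} docked"

    def depart(self, ship):
        if not self._guard.check(ship, "depart"):
            raise PermissionError(f"{ship} may not depart")
        return f"{ship} departed"


guard = Guard()
bay = DockingBay(guard)
guard.grant("shuttle", "depart")
print(bay.depart("shuttle"))   # allowed: an explicit grant exists

guard.revoke("shuttle", "depart")
try:
    bay.depart("shuttle")      # the cached "yes" was discarded on revoke
except PermissionError as err:
    print(err)
```

If `revoke` did not clear the cache, the second departure would succeed on a stale answer, which is exactly the performance shortcut Saltzer and Schroeder ask us to examine skeptically.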

(Original post with comments: Friday Star Wars and the Principle of Complete Mediation)

Least Privilege

Least Privilege: Every program and every user of the system should operate using the least set of privileges necessary to complete the job. Primarily, this principle limits the damage that can result from an accident or error. It also reduces the number of potential interactions among privileged programs to the minimum for correct operation, so that unintentional, unwanted, or improper uses of privilege are less likely to occur. Thus, if a question arises related to misuse of a privilege, the number of programs that must be audited is minimized. Put another way, if a mechanism can provide “firewalls,” the principle of least privilege provides a rationale for where to install the firewalls. The military security rule of “need-to-know” is an example of this principle.

In a previous post, I was having trouble choosing a scene to use. So I wrote to several people, asking for advice. One of those people was Jeff Moss, who has kindly given me permission to use his answer as the core of this week's post:

How about when on the Death Star, when R2D2 could not remotely deactivate the tractor beam over DeathNet(tm), Obi Wan had to go in person to do the job. This ultimately led to his detection by Darth Vader, and his death. Had R2D2 been able to hack the SCADA control for the tractor beam he would have lived. Unfortunately the designers of DeathNet employed the concept of least privilege, and forced Obi Wan to his demise.

Initially, I wanted to argue with Jeff about this. An actual least privilege system, I thought, would not have allowed R2 to see the complete plans and discover where the tractor beam controls are. But R2 is just playing with us. He already has the complete technical readouts of the Death Star inside him. He doesn't really need to plug in at all, except to get an orientation and a monitor to display Obi Wan's route.

But even if R2 didn't have complete plans, note the requirement to have the privileges “necessary to complete the job.” It's not clear if you could operate a battle station without having technical plans widely available for maintenance and repair. Which is a theme I'll return to as the series winds to its end.
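Jeff's DeathNet can be caricatured in a few lines of Python. This is only a sketch: the `station_systems` dictionary, its contents, and `try_deactivate` are invented for illustration, and `types.MappingProxyType` is a convenience for handing out a read-only view inside one program, not a real security boundary. Still, it shows the shape of the idea: the terminal R2 plugs into can read system state, which the job requires, but cannot change it.

```python
from types import MappingProxyType

# Hypothetical station state; the names and values are assumptions.
station_systems = {"tractor_beam": "engaged", "detention_level": "locked"}

# The wall socket gets a read-only view, not the dictionary itself.
terminal_view = MappingProxyType(station_systems)


def try_deactivate(view, system):
    """Attempt to switch a system off; report whether the view allowed it."""
    try:
        view[system] = "off"
    except TypeError:
        # Read-only views do not support item assignment.
        return False
    return True


print(terminal_view["tractor_beam"])                    # reading works
print(try_deactivate(terminal_view, "tractor_beam"))    # False: no write privilege
print(try_deactivate(station_systems, "tractor_beam"))  # True: the pedestal itself
```

Someone still has to walk to the pedestal, which is where the privilege lives.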

(Original post with comments: Star Wars and the Principle of Least Privilege)

Least Common Mechanism

Least Common Mechanism: Minimize the amount of mechanism common to more than one user and depended on by all users [28]. Every shared mechanism (especially one involving shared variables) represents a potential information path between users and must be designed with great care to be sure it does not unintentionally compromise security. Further, any mechanism serving all users must be certified to the satisfaction of every user, a job presumably harder than satisfying only one or a few users. For example, given the choice of implementing a new function as a supervisor procedure shared by all users or as a library procedure that can be handled as though it were the user's own, choose the latter course. Then, if one or a few users are not satisfied with the level of certification of the function, they can provide a substitute or not use it at all. Either way, they can avoid being harmed by a mistake in it.

The reasons behind the principle are a little less obvious this week. The goal of Least Common Mechanism (LCM) is to manage both bugs and cost. Most useful computer systems come with large libraries of sharable code to help programmers and users with commonly requested functions. (What those libraries entail has grown dramatically over the years.) These libraries are collections of code, and that code has to be written and debugged by someone.

Writing secure code is hard and expensive. Writing code that can effectively defend itself is a challenge, and if the system is full of large libraries that run with privileges, then some of those libraries will have bugs that expose those privileges.

So the goal of LCM is to constrain risks and costs by concentrating the security critical bits in as effective a fashion as possible. Which, if you recall that the best defense is a good offense, leads us to this week's illustration:

This is, of course, the ion cannon on Hoth destroying an Imperial Star Destroyer, and allowing a transport ship to get away. There is only one ion cannon (they're apparently expensive). It's a common mechanism designed to be acceptable to all the reliant ships.

That's about the best we can do. Star Wars doesn't contain a great example of minimizing common mechanism in the way that Saltzer and Schroeder mean it. Good examples of separation of privilege are also hard to find. Unless someone offers up a good example, I'll skip it, and head right to open design and psychological acceptability, both of which I'm quite excited about. They'll make fine ends to the series.

(Original post with comments: Star Wars and Least Common Mechanism)

Separation of Privilege

Separation of privilege: Where feasible, a protection mechanism that requires two keys to unlock it is more robust and flexible than one that allows access to the presenter of only a single key. The relevance of this observation to computer systems was pointed out by R. Needham in 1973. The reason is that, once the mechanism is locked, the two keys can be physically separated and distinct programs, organizations, or individuals made responsible for them. From then on, no single accident, deception, or breach of trust is sufficient to compromise the protected information. This principle is often used in bank safe-deposit boxes. It is also at work in the defense system that fires a nuclear weapon only if two different people both give the correct command. In a computer system, separated keys apply to any situation in which two or more conditions must be met before access should be permitted. For example, systems providing user-extendible protected data types usually depend on separation of privilege for their implementation.

This principle is hard to find examples of in the three Star Wars movies. There are lots of illustrations of delegation of powers, but few of requiring multiple independent actions. Perhaps the epic nature of the movies and the need for heroism is at odds with separation of privileges. I think there's a strong case to be made that heroic efforts in computer security are usually the result of important failures, and the need to clean up. Nevertheless, I committed to a series, and I'm pleased to be able to illustrate all eight principles.

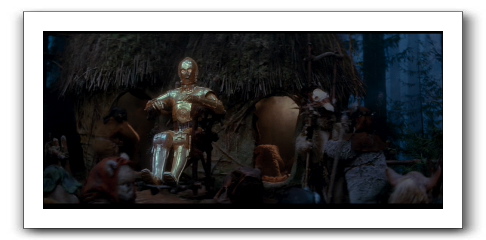

This week, we turn our attention to the Ewoks capturing our heroes. When C-3PO first tries to get them freed, despite being a god, he has to negotiate with the tribal chief. This is good security. 3PO is insufficiently powerful to cancel dinner on his own, and the spit-roasting plan proceeds. From a feeding-the-tribe perspective, the separation of privileges is working great. Gods are tending to spiritual matters and holidays, and the chief is making sure everyone is fed.

It is only with the addition of Luke's use of the Force that it becomes clear that C-3PO is all-powerful, and must be obeyed. While convenient for our heroes, a great many Ewoks die as a result. It's a poor security choice for the tribe.

Last week, Nikita Borisov, in a comment, called “Least Common Mechanism” the “Least Intuitive Principle.” I think he's probably right, and I'll nominate Separation of Privilege as most ignored. Over thirty years after its publication, every major operating system still contains a “root,” “administrator” or “DBA” account which is all-powerful, and nearly always targeted by attackers. It's very hard to design computer systems in accordance with this principle, and have them be usable.
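The two-key idea itself is small enough to sketch in Python. The role names and the function `may_cancel_dinner` are hypothetical, chosen to match the Ewok village; the only real content is that the action goes ahead when every required key holder has approved, and not before.

```python
# Hypothetical roles: both keys are needed before dinner can be cancelled.
REQUIRED_KEYS = frozenset({"god", "chief"})


def may_cancel_dinner(approvals):
    """Allow the action only if every required key holder has approved."""
    return REQUIRED_KEYS <= set(approvals)


print(may_cancel_dinner({"god"}))           # False: one key is not enough
print(may_cancel_dinner({"god", "chief"}))  # True: both keys presented
```

An all-powerful “root” account amounts to setting `REQUIRED_KEYS` to a single entry, which is easier to use and easier to abuse.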

(Original post with comments: Star Wars and Separation of Privilege)

Open Design

This principle and the next are the two which inspired me to use Star Wars to illustrate Saltzer and Schroeder's design principles.

Open design: The design should not be secret. The mechanisms should not depend on the ignorance of potential attackers, but rather on the possession of specific, more easily protected, keys or passwords. This decoupling of protection mechanisms from protection keys permits the mechanisms to be examined by many reviewers without concern that the review may itself compromise the safeguards. In addition, any skeptical user may be allowed to convince himself that the system he is about to use is adequate for his purpose. Finally, it is simply not realistic to attempt to maintain secrecy for any system which receives wide distribution.

The opening sentence of this principle is widely and loudly contested. The Gordian knot has, I think, been effectively sliced by Peter Swire, in A Model For When Disclosure Helps Security.

In truth, the knot was based on poor understandings of Kerckhoffs. In La Cryptographie Militaire, Kerckhoffs explains that the essence of military cryptography is that the security of the system must not rely on the secrecy of anything which is not easily changed. Poor understandings of Kerckhoffs abound. For example, my “Where is that Shuttle Going?” claims that “An attacker who learns the key learns nothing that helps them break any message encrypted with a different key. That's the essence of Kerckhoffs' principle: that systems should be designed that way.” That's a great mis-statement.

In a classical castle, things which are easy to change are things like the frequency with which patrols go out, or the routes which they take. Harder to change is the location of the walls, or where your water comes from. So your security should not depend on your walls or water source being secret. Over time, those secrets will leak out. When they do, they're hard to alter, even if you know they've leaked out.
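The same split between walls and patrol routes shows up in code. Below is a minimal Python sketch using the standard library's `hmac`, `hashlib`, and `secrets` modules; the `sign` and `verify` helpers and the message are made up for illustration. The mechanism (HMAC with SHA-256) is public and hard to change once deployed, so nothing depends on it being secret. The key is the easily changed part.

```python
import hashlib
import hmac
import secrets


def sign(key: bytes, message: bytes) -> bytes:
    """Public, well-reviewed mechanism; only the key is secret."""
    return hmac.new(key, message, hashlib.sha256).digest()


def verify(key: bytes, message: bytes, tag: bytes) -> bool:
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(sign(key, message), tag)


old_key = secrets.token_bytes(32)
order = b"open the shield door"  # hypothetical message
tag = sign(old_key, order)
print(verify(old_key, order, tag))  # True

# Suppose old_key leaks. Rotating it is one line; the design stays as it was.
new_key = secrets.token_bytes(32)
print(verify(new_key, order, tag))  # False: old tags no longer verify
```

A leaked key costs a rotation. A leaked design that was supposed to be secret costs a redesign, if there is time for one.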

Now, I promised in “Star Wars and the Principle of Least Privilege” to return to R2's copy of the plans for the Death Star, and today, I shall. Because R2's copy of the plans, which are not easily changed, ultimately leads to today's illustration:

The overall plans of the Death Star are hard to change. That's not to say that they should be published, but the security of the Death Star should not rely on them remaining secret. Further, when the rebels attack with snub fighters, the flaw is easily found:

OFFICER: We've analyzed their attack, sir, and there is a danger. Should I have your ship standing by?

TARKIN: Evacuate? In our moment of triumph? I think you overestimate their chances!

Good call, Grand Moff! Really, though, this is the same call that management makes day in and day out when technical people tell them there is a danger. Usually, the danger turns out to go unexploited. Further, our officer has provided the world's worst risk assessment. “There is a danger.” Really? Well! Thank you for educating us. Perhaps next time, you could explain probabilities and impacts? (Oh. Wait. To coin a phrase, you have failed us for the last time.) The assessment also takes less than 30 minutes. Maybe the Empire should have invested a little more in up-front design analysis. It's also important to understand that attacks only get better and easier as time goes on. As researchers do a better and better job of sharing their learning, the attacks get more and more clever, and the un-exploitable becomes exploitable. (Thanks to SC for that link.)

Had the Death Star been designed with an expectation that the plans would leak, someone might have taken that half-hour earlier in the process, when it could have made a difference.

(Original post with comments: Open Design)

Psychological Acceptability

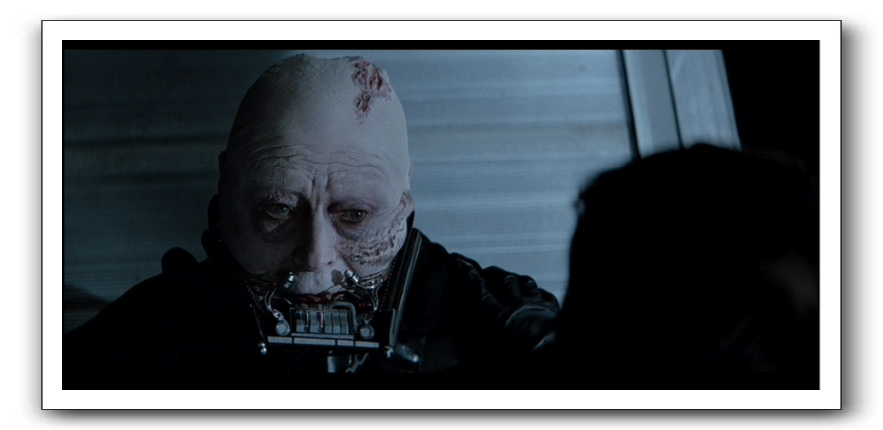

This week's Friday Star Wars Security Blogging closes the design principles series. (More on that in the first post of the series, “Economy of Mechanism.”) We close with the principle of psychological acceptability. We do so through the story that ties the six movies together: The fall and redemption of Anakin Skywalker.

There are four key moments in this story. There are other important moments, but none of them are essential to the core story of failure and redemption. Those four key moments are the death of Anakin's mother Shmi, the decision to go to the dark side to save Padmé, Vader's revelation that he is Luke's father along with his attempts to turn Luke, and Anakin's killing of Darth Sidious.

The first two involve Anakin's failure to save the ones he loves. He becomes bitter and angry. That anger leads him to the dark side. He spends twenty years as the agent of Darth Sidious, as his children grow up. Given that he started his career by murdering Jedi children, we can only assume that those twenty years involved all manner of evil. Even then, there are limits past which he will not go.

The final straw that allows Anakin to break the Emperor's grip is the command to kill his son. It is simply unacceptable. It goes so far beyond the pale that the small amount of good left in Anakin comes out. He slays his former master, and pays the ultimate price.

Most issues in security do not involve choices that are quite so weighty, but all have to be weighed against the psychological acceptability test. What is acceptable varies greatly across people. Some refuse to pee in a jar. Others decline to undergo background checks. Still others cry out for more intrusive measures at airports. Some own guns to defend themselves, others feel having a gun puts them at greater risk. Some call for the use of wiretaps without oversight, reassured that someone is doing something, while others oppose it, recalling past abuses.

Issues of psychological acceptability are hard to grapple with, especially when you've spent a day immersed in code or network traces. They're “soft and fuzzy.” They involve people who haven't made up their minds, or have nuanced opinions. It can be easier to declare that everyone must have eight-character passwords with mixed case, numbers, and special characters. That your policy has been approved by executives. That anyone found in non-compliance will be fired. That you have monitoring tools that will tell you that. (Sound familiar?) The practical difficulties get swept under the rug. The failures of the systems are declared to be a price we all must pay. In the passive voice, usually. Because even those making the decisions know that they are, on a very real level, unacceptable, and that credit, along with its evil twin accountability, is to be avoided.

Most of the time, people will meekly accept the bizarre and twisted rules. They will also resent them, and settle for small ways of getting back, rather than throwing their former boss into a reactor core. The story about NSA wiretapping, so much in the news, is in the news today because NSA officials have been strongly indoctrinated that spying on Americans is wrong. There are thirty years of culture, created by the Foreign Intelligence Surveillance Act, that you don't spy on Americans without a court order. They were ordered to discard that. It was psychologically unacceptable.

A powerful principle, indeed.

(Original post with comments: Psychological Acceptability)

(If you enjoyed these posts, you can read the others in the “Star Wars” category archive, and heck, maybe even my book, Threats: What Every Engineer Should Learn from Star Wars)