Threat Modeling Crypto Back Doors

Today, the Open Technology Institute released an open letter to the President of the United States from a broad set of organizations and experts, and I’m pleased to be a signer, and agree wholeheartedly with the text of the letter. (Some press coverage.)

I did want to pile on with an excerpt from chapter 9 of Threat Modeling: Designing for Security:

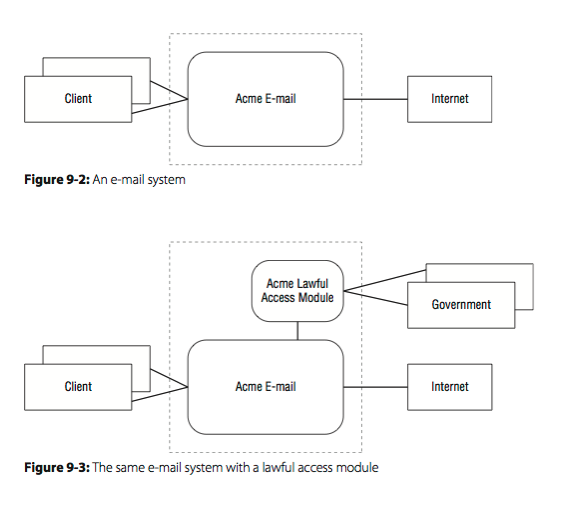

For another example of comparative threat modeling, consider the two systems shown in Figures 9-2 and 9-3. Figure 9-2 depicts an e-mail system, and Figure 9-3 is a version of 9-2 with a “lawful intercept” module added. (“Lawful intercept” is an Orwellian phrase for “thing which allows people to bypass the security features of your system.” Setting aside any arguments of “should we as a society have such a mechanism?” it’s possible to assess the technical security implications of adding such mechanisms.)

It should be obvious that Figure 9-2 is more secure than Figure 9-3. Using software-centric modeling, Figure 9-3 adds two data flows and a process; thus, by STRIDE-per-element, it has an additional 12 threats (tampering, information disclosure, DoS with each flow, for 6; and the six S,T,R, I, D, and E threats against the process for a total of 12). Additionally, Figure 9-3 has two apparent groupings of elevation-of-privilege threats: those posed by outsiders and those posed by software-allowed, but human-policy-violating, use. Thus, if Figure 9-2 has a list of threats (1…n), then Figure 9-3 has a list of threats (1…n+14).

If instead of software-centric modeling you use attacker-centered modeling on the systems shown in Figures 9-2 and 9-3, you find two sets of threats: First, each law enforcement agency that is authorized to connect adds its employees and IT systems as possible threats, and possible threat vectors. Second, attackers are likely to attack these features of the system to abuse them. The 2010 “Aurora” attacks on Google and others allegedly did exactly this (McMillan, 2010, and Adida, 2013). Thus, by comparing them you can see that the addition of these features creates additional risk. You might also wonder where those risks fall, but that’s outside the scope of this example.

More subtly, the addition of the code in Figure 9-3 is an obvious source of security vulnerabilities. As such, it may draw attention and possibly effort away from the rest of the system. Thus, the components that comprise Figure 9-2 are likely to be less secure, even ignoring the threats to the additional components. In the same vein, the requests and implementations for such back- doors may be confidential or classified. If that’s the case, the features may not go through normal tracking for implementation, testing, or review, again reducing the odds that they are secure. Of course, because such a system is designed to bypass other security controls, any weaknesses are likely to have outsized impact.

The technical arguments are simple. All other things being equal, systems with backdoors are unavoidably less secure, and probably worse than that. American companies cannot be competitive if the government forces us to add them.

[Update, July 7, 2015: A group of experts has released a longer paper, “Keys Under The Doormat.” Blogs from Ross Anderson and Steve Bellovin give additional perspective.]