SHB Session 7: Privacy

Tyler Moore chaired the privacy session.

Alessandro Acquisti, CMU. (Suggested reading: What Can Behavioral Economics Teach Us About Privacy?; Privacy in Electronic Commerce and the Economics of Immediate Gratification.) It’s not that people act irrationally; it’s that we need deeper models of their privacy choices. Findings include the illusion of control and overconfidence; in privacy, people seek ambiguity; and people become more privacy-protecting after confidentiality notices. Two experiments. The first was on herding effects. Subjects were asked six intrusive questions about behavior (e.g., “Have you ever had sex with the current husband, wife, or partner of a friend?”). After answering each question, they were presented with an ostensible distribution of other people’s answers, which was in fact manipulated. Answers were “at least once,” “never,” or “refused to answer.” Would the answers shown trigger higher admission rates? Cumulative admission rates went way up as people were shown that others were admitting to sensitive behavior. (Is there a ‘bragging’ effect?) Second study: do privacy intrusions sensitize or desensitize people? Similar questions, ordered either from tame to intrusive or from intrusive to tame. The “frog” hypothesis was rejected; coherent arbitrariness was accepted.

Adam Joinson, Bath. (Suggested reading: Privacy, Trust and Self-Disclosure Online [link to http://people.bath.ac.uk/aj266/pubs_pdf/joinson_et_al_HCI_final.pdf no longer works]; Privacy concerns and privacy actions [link to http://people.bath.ac.uk/aj266/pubs_pdf/ijhcs.pdf no longer works].) Experimental psychologist. Looking at expressive privacy, the need to communicate, which allows us to connect to other people (DeCew, 1997). There is a business case for expressive privacy: a social network’s design goal is to bring people from discovery, through a superficial environment, to true commitment. Looked at 426 Facebook users in the UK, US, Greece, France, and Italy. Uses of the site varied between countries: photos, status updates, social investigation, social connections. Big differences in trust of Facebook itself. US and UK users closed their privacy settings; Italians had open profiles and also joined lots of groups. Trust in Facebook matters much more than trust in other users. More friends, less trust in peers. Lower trust leads to less use of the site. Second study looked at tweets, comparing SecretTweet [link to http://secrettweet.com/ no longer works] with Twitter. Clear linguistic markers: personal pronouns, sexual words, past tense. More exclamation marks in normal tweets. Checked whether the number of followers influences tweets: does audience size matter? Two groups: fewer than 100 followers and more than 200. No difference. Value creation depends on expressive privacy; if you don’t provide privacy, you become banal.

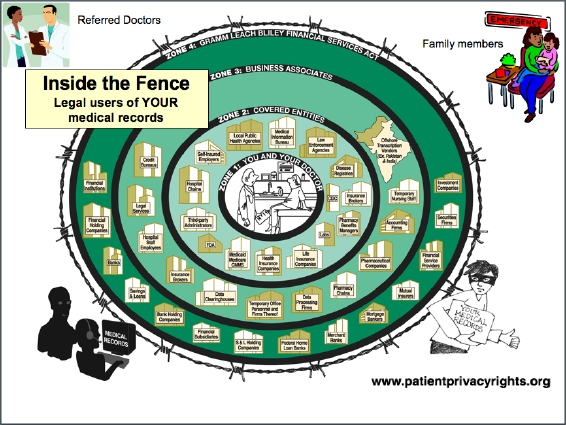

Peter Neumann, SRI. (Suggested reading: Holistic systems; Risks; Identity and Trust in Context.) Takes a holistic view. Three topics: health care, voting, and China. Shows a medical privacy chart [link to http://www.patientprivacyrights.org/site/PageServer?pagename=Who_Can_See_Your_Medical_Records no longer works] covering the 4 million people who can see medical information about you:

Continuing with Peter Neumann: he claims Andrew is incorrect; we might be able to build secure voting machines, but we can’t build secure voting systems. If we want privacy of votes, we need reliability. In California, only blind people were using the DRE machines with a paper trail, and the paper trail does them no good.

Moves on to the Green Dam software. Everyone selling computers in China will have to put the software on their computers. The Chinese may be installing all sorts of trapdoors. Any would-be solution to all three problems has to look at the pervasive nature of threats. Design requires understanding privacy threats and addressing them early. Ordinary people have no idea of the depth of the problem. Bottom line: I can’t trust anybody to build a perfect system.

Eric Johnson, Dartmouth. (Suggested reading: Access Flexibility with Escalation and Audit; Security through Information Risk Management.) Working on a problem that many people consider solved: information access. Users request access, owners approve, and systems are administered. But CIOs say access is failing in their organizations. Some people don’t have the information they need; others have far too much. Did field studies in banks (investment and retail). In one bank, there were 22,000 employees for 11,000 roles. One bank (in great shape) had more roles than employees. Sitting the managers down to sort this out is not realistic. Open questions: how to manage roles, how to understand and visualize them, and role drift. Origins of complexity: thousands of applications, hundreds of entitlements, tens of thousands of users. In a perfect world, we could match permissions to requirements. Many organizations use the “get the job done” approach: entitlements are copied and pasted from one employee to another. Result: over-entitlement, with an estimated 50-90% of employees over-entitled. A dynamic organizational structure makes it difficult to de-provision; you can track employees’ tenure based on their entitlement sets. Can we use incentives to control access? In the medical space, standard practice is “break the glass.” (Image of “Hospital workers fired for looking at Spears’s records.”) Firing makes people risk averse.
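To make the over-entitlement and “break the glass” ideas concrete, here is a small illustrative sketch. It is not Johnson’s system: the role names, entitlement names, and the helpers `copy_entitlements`, `over_entitlement`, `share_over_entitled`, and `request_access` are all hypothetical. It shows how cloning a colleague’s grants produces over-entitlement, and how access outside one’s entitlements can be granted on demand as long as each escalation carries a reason and is logged for later audit.

```python
# Illustrative sketch only: all roles, entitlements, and helpers are hypothetical.
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role catalogue: role -> entitlements the job actually requires.
ROLE_NEEDS: dict[str, set[str]] = {
    "teller": {"view_accounts", "post_deposits"},
    "loan_officer": {"view_accounts", "view_credit", "approve_loans"},
}


@dataclass
class Employee:
    name: str
    role: str
    granted: set[str] = field(default_factory=set)


def copy_entitlements(template: Employee, new_hire: Employee) -> None:
    """The 'get the job done' shortcut: clone a colleague's grants wholesale."""
    new_hire.granted |= template.granted


def over_entitlement(emp: Employee) -> set[str]:
    """Entitlements held beyond what the employee's current role requires."""
    return emp.granted - ROLE_NEEDS.get(emp.role, set())


def share_over_entitled(staff: list[Employee]) -> float:
    """Fraction of employees holding at least one unneeded entitlement."""
    if not staff:
        return 0.0
    return sum(1 for e in staff if over_entitlement(e)) / len(staff)


@dataclass
class AuditLog:
    # Each entry: (UTC timestamp, employee name, entitlement, stated reason).
    entries: list[tuple[datetime, str, str, str]] = field(default_factory=list)


def request_access(emp: Employee, entitlement: str, log: AuditLog, reason: str = "") -> bool:
    """Allow routine access; otherwise 'break the glass' only with a reason, logged for audit."""
    if entitlement in emp.granted:
        return True
    if reason:
        log.entries.append((datetime.now(timezone.utc), emp.name, entitlement, reason))
        return True
    return False


if __name__ == "__main__":
    alice = Employee("alice", "loan_officer",
                     {"view_accounts", "view_credit", "approve_loans", "wire_transfer"})
    bob = Employee("bob", "teller")
    copy_entitlements(alice, bob)  # bob's grants are cloned from alice's
    carol = Employee("carol", "teller", {"view_accounts", "post_deposits"})

    print(sorted(over_entitlement(bob)))  # ['approve_loans', 'view_credit', 'wire_transfer']
    print(round(share_over_entitled([alice, bob, carol]), 2))  # 0.67

    log = AuditLog()
    print(request_access(carol, "view_credit", log))  # False: no grant, no reason
    print(request_access(carol, "view_credit", log, reason="customer dispute"))  # True
    print(len(log.entries))  # 1
```

One reading of “escalation and audit” is that, rather than trying to pre-assign perfect entitlements, the organization lets people get the job done and relies on the audit trail (and its consequences, as in the hospital firings) to deter abuse; the sketch encodes only that general shape.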

Christine Jolls, Yale. (Suggested reading: Rationality and Consent in Privacy Law; Employee Privacy.) Law professor with economic training. Argues that (lawyer jokes aside) law can help privacy. People share information with their intimates. Recent research into human happiness shows that the most robust predictor of happiness is rich social interaction. An important aspect of privacy is control (hat tip danah). So how do we get control? Partly technology, partly law. The hardest problem is high-frequency, low-stakes inquiries. She wants to address a less important but under-noticed problem: people making decisions around privacy when employers demand access to employees. (Let us look at your email, let us drug test you.) The pattern in law: agreement given in advance is not binding, but agreement given on the day is a binding grant of access. This makes sense from a behavioral economics perspective. First, people think bad stuff won’t happen to them. Second, people tend to be focused on the present; imminent things are more imposing. Some older cases: consumers would buy on credit, and the contract would allow home entry for repossession. Courts would disallow that, but allow repossession when someone showed up and got permission. Last comment: the politics of privacy law is unusual. Mentions a conservative justice arguing against searches of customs officials, and closing with a hope that customs agents will learn from the respect given to their privacy.

Andrew Adams [link to http://www.personal.rdg.ac.uk/~sis00aaa/ no longer works], Reading. (Suggested reading: Regulating CCTV [link to http://deposit.depot.edina.ac.uk/119/ no longer works].) Starts by stating (and refuting) the idea that privacy is for old fogies; in fact, young people have a different conception of it. His research focuses on attitudes toward self-revelation, whether by people themselves or by others. In the UK, Facebook, MySpace and Bebo have 1/3 of the market each. Asked people who they talk to. The principal reason to be online is to increase interaction with people they know in real life. Most UK students live in the “laundry belt”: close enough for trips home for laundry, not so close that parents look over their shoulder (~2 hour drive). One use of new technology is to stay in touch with friends from high school. Some people reveal too much information. Interviewees don’t say they revealed too much, but some did say that they had been “inappropriate” and revealed extra information about themselves that an employer might see. They wanted more guidance about what to reveal. One thing that came through clearly was that it’s not OK to reveal information about others that they wouldn’t reveal about themselves. In Japan, the systems are Mixi, Mi-Chaneru, and Purof. There is some openness to making online-only friends, and more perceived online anonymity. Concept of “victim responsibility”: it’s your fault for having bad friends.

Comments and questions:

David Livingstone Smith: I’ve heard a number of definitions of privacy; suggests that privacy is control of information belonging to oneself. Christine says that there’s a huge literature on the subject, and offers a thought experiment: a story with your name and information which you don’t want known appears on another planet. Has your privacy been violated? (Information doesn’t jump around without a cause.) Alessandro asks if David believes in a single definition of privacy: “no.” Rachel Greenstadt asks about victim responsibility and degree of control, e.g., what about family? Andrew suggests there is less use of family as SNS friends, and that there’s a much greater level of pseudonymity in Japan, “almost everyone.”

Caspar Bowden asks Eric how well hierarchical access control works: does the level of escalation matter? Caspar also asks Christine about coercion. Christine says there’s a big difference between countries; in the US there’s employment at will. Peter goes back to access control; he is building an attribute-based messaging system. I ask Eric how close we can get to perfect. He suggests that it may be too expensive to get anything close to perfect. Joe Bonneau mentions to Peter that there are working exploits already available for Green Dam, and asks Alessandro if he’s seen the effect that privacy settings only ratchet up. After his early survey, many surveyees changed their privacy settings.

Andrew Patrick suggests that the frog thing is a myth. Alma asks Christine, ‘What happens if the story isn’t true?’ Christine suggests it’s an interesting question. Andrew Adams says his students are most concerned about incorrect information that might be attributed to them. Jon Callas says that he’s glad danah found people who lied about themselves, because it’s a good technique: if it’s well known that there are lots of falsehoods, then you gain plausible deniability. Christine says we should try to do more to protect people in other ways, since lying is suboptimal; Jon says it may be needed. Alessandro says there are limits. I mention Dan Solove’s Understanding Privacy book (my review); Andrew Adams mentions The Future of Reputation.

Chris Soghoian says that if we want to teach kids to obey all rules, then, since Google’s terms of service forbid use by those under 18, we should teach our kids not to use Google.